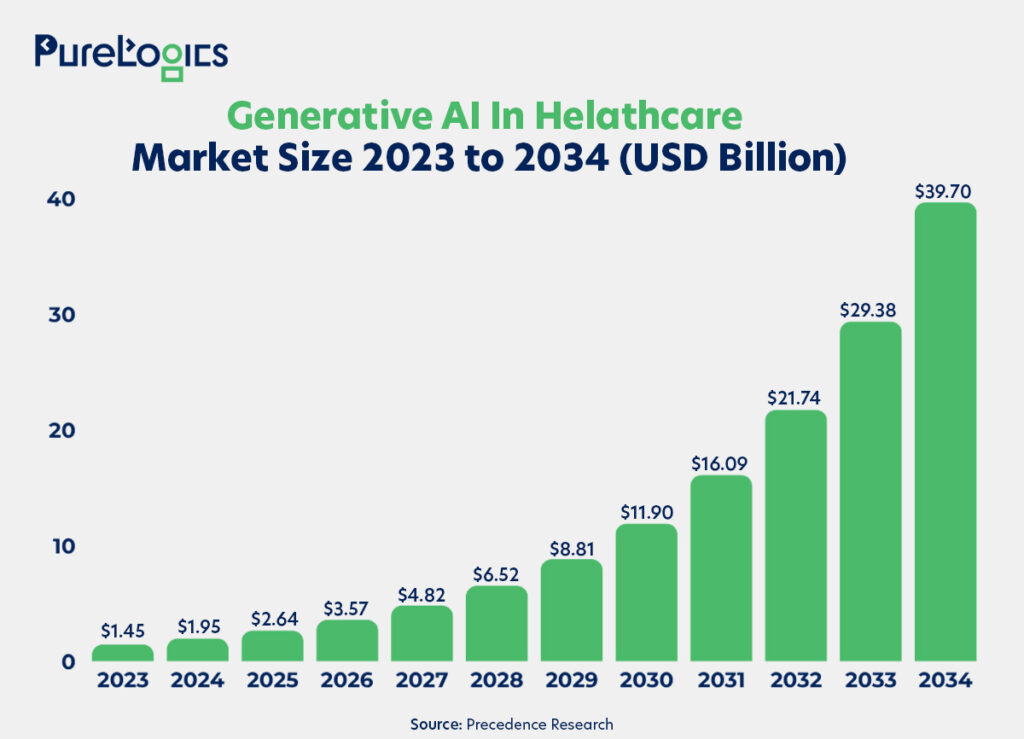

Generative AI in healthcare is set to move deeper into care delivery and operations, and market momentum reflects this shift. A Precedence Research study, based on industry publications, company reports, and analysis of market drivers such as investment trends and AI adoption, projects that the gen AI market in healthcare will reach $40 billion by 2034.

In addition, a McKinsey & Company survey of US healthcare leaders found that generative AI is perceived to deliver the greatest value in three major areas:

- 73% believe that gen AI can increase clinical/clinician productivity.

- 60% believe gen AI can improve administrative efficiency.

- 62% say gen AI can enhance patient engagement.

Deloitte’s Life Sciences and Healthcare Generative AI Outlook Survey also found that 75% of leading healthcare companies are experimenting with gen AI. This suggests that healthcare leaders who are not yet experimenting risk falling behind, while those who adopt it strategically can gain a competitive edge. Below are the generative AI trends in healthcare that can help organizations decide where to focus their adoption efforts.

The Future of Generative AI in Healthcare: Trends to Watch in 2026

1. Administrative & Clinical Workflow Automation

Hospitals are adopting gen AI to automate tasks such as clinical documentation, discharge summaries, and billing notes. AI copilots built on large language models (LLMs) can transcribe doctor-patient conversations and generate SOAP-style (Subjective, Objective, Assessment, and Plan) notes in seconds. Given these efficiency gains, the trend is likely to continue. Earlier this year, a Healthcare Dive and Microsoft survey of healthcare executives indicated that adoption of specific AI use cases could increase sharply between now and 2026. Clinical documentation is a particular focus: 42% of the executives said they planned to implement AI tools for it. However, maintaining data accuracy, privacy, and clear audit trails remains a challenge.
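
To make the documentation workflow above more concrete, here is a minimal Python sketch of one post-processing step. It assumes a hypothetical scribe model that returns its draft as JSON, with one key per SOAP section. The `SOAPNote` class, the `parse_draft` function, and the audit fields are illustrative only and do not reflect any vendor's API. The sketch shows one way to check each draft for completeness and tag it with an audit trail before a clinician reviews it.

```python
import hashlib
import json
from dataclasses import dataclass
from datetime import datetime, timezone

SECTIONS = ("subjective", "objective", "assessment", "plan")


@dataclass
class SOAPNote:
    subjective: str
    objective: str
    assessment: str
    plan: str
    # Audit trail: which (hypothetical) model drafted the note, from which transcript, and when.
    model_id: str
    transcript_sha256: str
    created_at: str
    # Drafts stay unapproved until a clinician reviews and signs them.
    clinician_approved: bool = False


def parse_draft(llm_json: str, transcript: str, model_id: str) -> SOAPNote:
    """Validate a model's JSON draft and attach audit metadata."""
    data = json.loads(llm_json)
    missing = [s for s in SECTIONS if not str(data.get(s, "")).strip()]
    if missing:
        raise ValueError(f"draft is missing SOAP sections: {missing}")
    return SOAPNote(
        **{s: str(data[s]).strip() for s in SECTIONS},
        model_id=model_id,
        # Store a hash rather than the transcript itself, so the log holds no raw conversation text.
        transcript_sha256=hashlib.sha256(transcript.encode("utf-8")).hexdigest(),
        created_at=datetime.now(timezone.utc).isoformat(),
    )


if __name__ == "__main__":
    # Hypothetical model output for a fictional patient.
    draft = json.dumps({
        "subjective": "Patient reports a dry cough for three days.",
        "objective": "Temperature 37.9 C; lungs clear on auscultation.",
        "assessment": "Likely viral upper respiratory infection.",
        "plan": "Supportive care; return if symptoms worsen.",
    })
    note = parse_draft(draft, transcript="(recorded visit transcript)", model_id="example-scribe-v1")
    print(note.assessment)  # Likely viral upper respiratory infection.
```

Rejecting incomplete drafts outright is a design choice made for this sketch. A real system might instead flag the missing sections for the clinician to fill in.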

2. Multimodal & Personalized Diagnosis

2026 is expected to see the rise of multimodal AI systems that integrate text, images, and structured data to create richer diagnostic insights. For example, combining MRI images, lab results, and genomic data can help predict patient-specific responses to treatment. Generative models are also used to produce synthetic medical images. These images augment limited datasets for rare diseases and speed up AI research with lower privacy risk than sharing real patient data. Emerging multimodal AI systems such as Google DeepMind’s Med-PaLM M, IBM Watson Genomics, and PathAI combine text, imaging, and molecular data to provide patient-specific diagnostic insights.

However, their code, training data, and model parameters remain proprietary, making them difficult to adapt for specific institutional workflows or compliance standards. Hospitals and research organizations are therefore increasingly turning toward custom healthcare software development, building multimodal systems tailored to their internal data ecosystems, governance policies, and unique operational needs.

3. Smart Hospital Operations & Edge AI

Generative AI in healthcare can optimize patient flow, predict bed occupancy, and dynamically manage staff workloads. Meanwhile, edge AI models running on local devices allow continuous patient monitoring without sending all data to the cloud. This is especially useful for remote care models such as chronic disease management. The trend is expected to continue, although it requires robust infrastructure and interoperability standards.
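
The data-minimization idea behind edge monitoring can be sketched in a few lines of Python. This is a simplified illustration under stated assumptions, not a device implementation. `EdgeMonitor` is a hypothetical class, and its thresholds are placeholders rather than clinical guidance. The `outbox` list stands in for whatever transport a real device would use to reach the cloud. Raw readings stay on the device, and only out-of-range alerts and periodic summaries are queued for upload.

```python
from collections import deque
from statistics import mean


class EdgeMonitor:
    """Hypothetical monitor: keeps raw readings local, queues only alerts and summaries."""

    def __init__(self, low: float, high: float, window: int = 60) -> None:
        self.low = low        # placeholder thresholds, not clinical guidance
        self.high = high
        self.window = window  # number of readings per periodic summary
        self.buffer: deque = deque(maxlen=window)
        self.outbox: list = []  # stands in for messages sent to the cloud

    def ingest(self, value: float) -> None:
        self.buffer.append(value)
        if value < self.low or value > self.high:
            # Out-of-range readings are forwarded immediately.
            self.outbox.append({"type": "alert", "value": value})
        if len(self.buffer) == self.window:
            # Otherwise, only a compact summary leaves the device.
            self.outbox.append({
                "type": "summary",
                "mean": round(mean(self.buffer), 1),
                "min": min(self.buffer),
                "max": max(self.buffer),
            })
            self.buffer.clear()


if __name__ == "__main__":
    monitor = EdgeMonitor(low=50, high=120, window=5)  # e.g. heart rate in bpm
    for bpm in [72, 75, 130, 74, 73]:
        monitor.ingest(bpm)
    print(monitor.outbox)
    # [{'type': 'alert', 'value': 130}, {'type': 'summary', 'mean': 84.8, 'min': 72, 'max': 130}]
```

In this example, five raw readings produce only two outgoing messages. The same trade-off (less bandwidth and less data exposure, at the cost of discarding detail) drives real edge designs.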

4. Drug Discovery & Synthetic Data Acceleration

Pharma companies are leaning on gen AI to shorten drug discovery timelines. These models can generate new molecular structures, predict compound effectiveness, and support early-stage trials with synthetic data. More broadly, MediTech Insights projects that gen AI in healthcare will grow at a 36-38% compound annual growth rate (CAGR) between 2024 and 2029. Its study, titled Global Generative Artificial Intelligence in Healthcare Market, draws on primary research (interviews with healthcare providers, biotech firms, and AI vendors) and secondary analysis of industry publications and company reports. Companies such as Insilico Medicine (through its Pharma.AI platform), BenevolentAI, and Atomwise already use generative AI models to design new molecules and predict compound-target interactions. Tools such as Microsoft BioGPT and NVIDIA BioNeMo help accelerate preclinical data synthesis and drug design simulations, shortening early discovery cycles.
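
To put the projected growth rate in perspective, compounding a 36-38% CAGR over the five years from 2024 to 2029 means the market would end the period at roughly 4.7 to 5 times its 2024 size:

$$
\text{growth multiple} = (1 + r)^{n}, \qquad n = 2029 - 2024 = 5
$$

$$
(1.36)^{5} \approx 4.65, \qquad (1.38)^{5} \approx 5.00
$$

These multiples are simple arithmetic on the stated CAGR range, not additional figures reported by the study.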

5. Governance, Ethics and Trust

As generative AI moves deeper into clinical settings, governance frameworks are catching up. Initiatives like FUTURE-AI emphasize fairness, explainability, and traceability for trustworthy medical AI. Federated learning lets institutions train a shared model on decentralized data without exchanging raw patient records. It is expected to gain traction as a privacy-first approach, although it still faces implementation challenges.
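
Federated learning is commonly implemented with federated averaging (FedAvg). Each site trains on its own data, and a coordinating server averages the resulting model weights, weighted by each site's sample count. The Python sketch below shows that loop for a toy one-feature linear model. The functions, learning rate, round counts, and synthetic "hospital" datasets are illustrative assumptions. Real deployments add secure aggregation, privacy protections, and far larger models.

```python
Model = list[float]                  # [weight, bias] of a one-feature linear model
Dataset = list[tuple[float, float]]  # (x, y) pairs held privately by one site


def local_update(model: Model, data: Dataset, lr: float = 0.1, epochs: int = 20) -> Model:
    """Gradient descent on one site's private data; only the weights are returned."""
    w, b = model
    n = len(data)
    for _ in range(epochs):
        # Gradients of mean squared error with respect to w and b.
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return [w, b]


def federated_average(updates: list[Model], sizes: list[int]) -> Model:
    """Server step: average site models, weighted by each site's sample count."""
    total = sum(sizes)
    return [sum(m[i] * s for m, s in zip(updates, sizes)) / total for i in range(len(updates[0]))]


def train(sites: list[Dataset], rounds: int = 50) -> Model:
    model: Model = [0.0, 0.0]
    for _ in range(rounds):
        # Each site starts from the current global model; raw data never leaves the site.
        updates = [local_update(model, data) for data in sites]
        model = federated_average(updates, [len(data) for data in sites])
    return model


if __name__ == "__main__":
    # Synthetic data on the line y = 2x + 1, split across three hypothetical hospitals.
    sites = [
        [(x, 2 * x + 1) for x in (0.0, 0.25, 0.5, 0.75, 1.0)],
        [(x, 2 * x + 1) for x in (0.1, 0.4, 0.7, 1.0)],
        [(x, 2 * x + 1) for x in (0.2, 0.6, 0.9)],
    ]
    w, b = train(sites)
    print(round(w, 2), round(b, 2))  # 2.0 1.0
```

Only the two model weights cross site boundaries in this sketch. Still, shared weights can leak information about the training data, which is one reason production systems layer extra privacy techniques on top of FedAvg.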

Together, these trends show that generative AI in healthcare is moving from early experimentation to a more mature phase, where success depends on trust, validation, and governance. As the industry continues to evolve, the focus is turning to building responsible, scalable gen AI systems in healthcare.

Building Responsible Gen AI Systems in Healthcare

Organizations are entering a new phase focused on trust, scalability, and compliance. This evolution emphasizes reliable data grounding, strategic partnerships, and strong governance frameworks to ensure long-term adoption and impact.

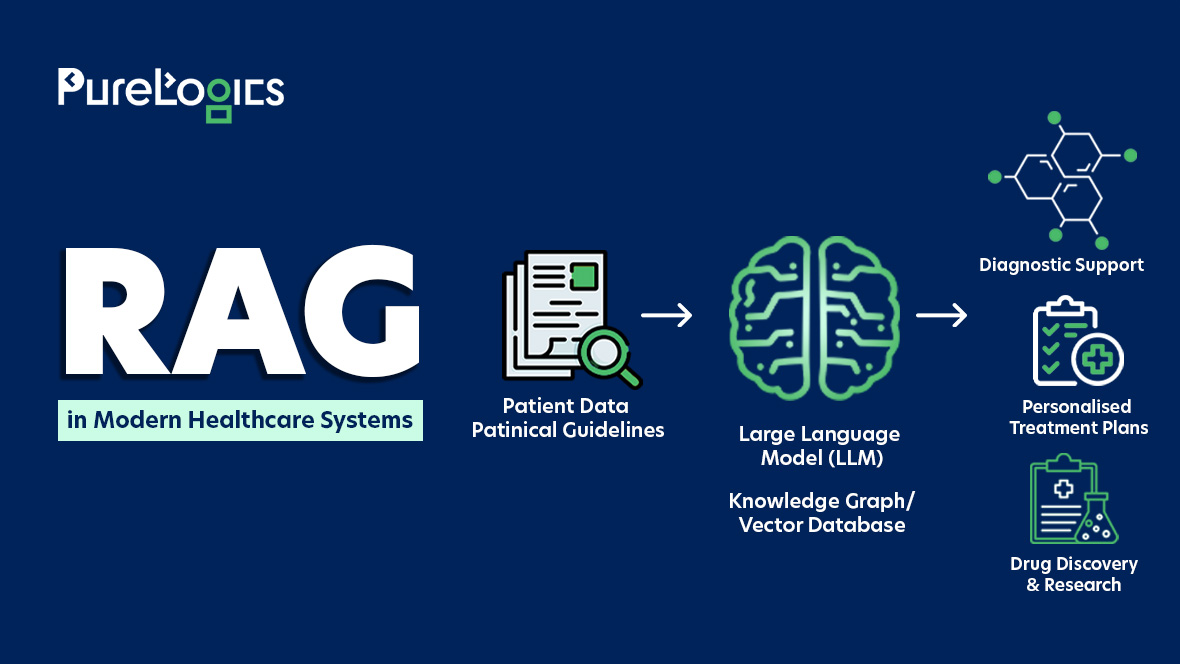

RAG-Based Clinical Support Systems

General-purpose LLMs such as ChatGPT or Gemini often produce responses that are not grounded in clinical evidence. To address this, healthcare organizations will need to adopt Retrieval-Augmented Generation (RAG) architectures, which ground LLM answers in validated internal databases. A multi-institutional study led by researchers at Atropos Health assessed five large language model systems on 50 real-world clinical questions. The collaborators included experts from UCLA, Stanford University, Columbia University, the University of Michigan, UC San Diego, and the Hospital for Sick Children (Toronto). Nine independent physicians reviewed each response for reliability and clinical relevance. General-purpose models such as ChatGPT-4, Claude 3 Opus, and Gemini Pro 1.5 achieved only 2-10% evidence-based accuracy. By contrast, the RAG-powered system delivered accurate, clinically useful answers to 58% of the questions.
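
As a rough illustration of the RAG pattern described above, the Python sketch below retrieves passages from a small validated corpus. It then builds a prompt that instructs the model to answer only from those sources. Everything here is an assumption for illustration:

- The bag-of-words cosine retriever stands in for the embedding search a production system would use.
- The `min_score` cut-off is arbitrary.
- The corpus entries are hypothetical placeholders.
- The LLM call itself is left out.

This is not the architecture used in the study cited above.

```python
import math
import re
from collections import Counter
from typing import Optional


def tokens(text: str) -> Counter:
    """Lowercased bag of words; a stand-in for real embeddings."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(question: str, corpus: dict[str, str], k: int = 2, min_score: float = 0.2) -> list[tuple[str, str]]:
    """Return up to k (doc_id, text) passages whose similarity clears min_score."""
    q = tokens(question)
    scored = sorted(((cosine(q, tokens(text)), doc_id, text) for doc_id, text in corpus.items()), reverse=True)
    return [(doc_id, text) for score, doc_id, text in scored[:k] if score >= min_score]


def build_prompt(question: str, passages: list[tuple[str, str]]) -> Optional[str]:
    """Ground the model in retrieved sources, or abstain when nothing relevant was found."""
    if not passages:
        return None
    context = "\n".join(f"[{doc_id}] {text}" for doc_id, text in passages)
    return (
        "Answer using only the sources below and cite them by ID. "
        "If the sources are insufficient, say so.\n\n"
        f"Sources:\n{context}\n\nQuestion: {question}"
    )


if __name__ == "__main__":
    # Hypothetical placeholders for an institution's validated documents.
    corpus = {
        "doc-1": "Hypothetical internal protocol: metformin dosing guidance for adults with type 2 diabetes.",
        "doc-2": "Hypothetical internal protocol: discharge checklist for cardiac surgery patients.",
    }
    question = "What is the metformin dosing guidance for type 2 diabetes?"
    prompt = build_prompt(question, retrieve(question, corpus))
    print(prompt)  # this prompt would then go to an LLM; the call is out of scope here
```

Returning `None` when nothing relevant is retrieved is a deliberate choice in this sketch. In a clinical setting, declining to answer is usually safer than letting the model improvise without evidence.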

Partnership-Centric Deployment Models

Gen AI implementation is increasingly collaborative. A McKinsey survey of 150 US healthcare organizations across different segments found that 61% are pursuing third-party partnerships for customized gen AI solutions, compared to 20% developing in-house. Partnerships with healthcare software development companies can address challenges in talent, data management, and speed to market. They offer a faster path to scale and a potential competitive advantage.

Rising Validation and Regulatory Scrutiny

As generative AI adoption in healthcare increases, regulatory and validation requirements are growing, too. In the European Union (EU), the Artificial Intelligence Act classifies many medical AI systems as high-risk. That classification subjects them to strict requirements, including human oversight such as human-in-the-loop (HITL) review.

Globally, the World Health Organization (WHO) has published guidance focused on governing AI in healthcare, emphasizing transparency, risk management, data quality, and accountability.

Risk Management as a Differentiator

Risk management gives healthcare organizations a real edge when building gen AI systems. By actively addressing data security, validation, and ethical safeguards, hospitals and clinics can earn greater trust from clinicians, patients, and regulators. That trust can increase adoption and minimize setbacks caused by compliance issues or technical failures. Healthcare leaders should recognize that pairing innovation with effective risk management is a mark of maturity. It helps organizations move faster, scale safely, and stand out as dependable leaders in responsible AI.

Takeaway

Generative AI in healthcare is improving clinical documentation, patient engagement, workflow management, and drug discovery. At the same time, healthcare organizations must focus on accuracy, privacy, and trust, and leaders who delay integrating gen AI into their workflows risk falling behind. McKinsey's survey shows that many healthcare organizations are turning to healthcare software development companies for customized gen AI solutions. Such partners can also help make those solutions HIPAA-compliant. PureLogics is one such partner, providing expert guidance for safe, efficient, and scalable AI adoption. With a dedicated team of healthcare developers, we design and develop secure, practical solutions tailored to the needs of healthcare organizations. Book a 30-minute consultation with our experts to learn how we can help.

Frequently Asked Questions

Give examples of generative AI applications in healthcare.

Generative AI in hospitals is being used to automate clinical documentation. For instance, tools such as Microsoft's Nuance DAX Copilot, AWS HealthScribe, and Google MedLM already listen to doctor-patient conversations and convert them into structured SOAP-style notes (Subjective, Objective, Assessment, Plan). They then integrate these notes into EHR systems like Epic and Cerner, saving clinicians hours of manual work each week. However, these solutions operate as proprietary cloud services. Hospitals with unique documentation formats or integration needs are therefore moving toward AI-powered note-generation systems tailored to their workflows.

Is there medical AI like ChatGPT?

Yes. There are AI tools designed specifically for healthcare that work like ChatGPT, but data safety and compliance can be issues. Proper safeguards and governance are essential to ensure these tools are used safely and effectively in clinical settings.

What is the role of generative AI in healthcare?

Generative AI can assist with clinical, operational, and research tasks by automating clinical documentation, generating patient-specific care recommendations, and supporting administrative workflows. It can also aid drug discovery by analyzing complex datasets and simulating molecular structures. Overall, it can improve efficiency, reduce clinical workload, and support better-informed healthcare decisions.

October 27 2025
