API response time is a core element of modern software development, affecting system efficiency, user experience, and business success. Users expect nearly instant responses from services and applications. Slow APIs frustrate users, cost revenue, and reduce productivity. As a result, improving API performance has become a top priority for companies across many industries.

To optimize API performance effectively, you first need a clear understanding of how your APIs work. Access to performance monitoring and profiling tools will also help you identify bottlenecks and measure the effect of each optimization. Advanced knowledge in these areas is beneficial, but the strategies outlined here can be applied to your APIs without it.

Implementing Rate Limiting for Optimized API Performance

Let’s explore the importance of rate limiting in optimizing API speed, the different types of rate-limiting strategies available, and different methods to effectively implement rate-limiting mechanisms.

Types of API Rate Limiting Strategies

Various rate-limiting strategies are useful for developers when optimizing API performance. A few of the most common include:

Token bucket algorithm

The token bucket algorithm is a widely used rate-limiting approach that uses a bucket of tokens to control the request rate. Tokens are added to the bucket at a fixed rate, up to a maximum capacity, and a request is served only if a token is available to spend on it. This regulates request flow and prevents the server from being overwhelmed, while still allowing short bursts up to the bucket’s capacity.
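The refill-and-spend behavior described above can be sketched in a few lines of Python. This is a minimal, single-process illustration, not a production limiter: the class name `TokenBucket`, its parameters, and the injectable `clock` argument are assumptions made for the example.

```python
import time


class TokenBucket:
    """Token bucket: tokens refill at a fixed rate up to a maximum capacity."""

    def __init__(self, rate, capacity, clock=time.monotonic):
        self.rate = rate              # tokens added per second
        self.capacity = capacity      # maximum number of stored tokens (burst size)
        self.clock = clock            # injectable time source, handy for tests
        self.tokens = float(capacity)
        self.updated = clock()

    def allow(self, cost=1):
        """Spend `cost` tokens and return True if enough are available."""
        now = self.clock()
        # Refill according to elapsed time, never exceeding capacity.
        elapsed = now - self.updated
        self.tokens = min(self.capacity, self.tokens + elapsed * self.rate)
        self.updated = now
        if self.tokens >= cost:
            self.tokens -= cost
            return True
        return False
```

In a real API you would typically keep one bucket per client key and call `allow()` at the start of each request, rejecting the request when it returns `False`.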

Leaky bucket algorithm

Like the token bucket algorithm, the leaky bucket algorithm manages the request rate by buffering requests in a bucket. Here, however, requests are processed at a constant rate, and any requests that arrive when the bucket is full overflow and are discarded. This technique smooths out traffic bursts and ensures a steady flow of requests to the server.
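A rough sketch of the leaky bucket follows. It uses the "meter" form of the algorithm, which tracks the bucket's fill level instead of holding an actual queue of requests. The class name `LeakyBucket` and its parameters are hypothetical, chosen only for illustration.

```python
import time


class LeakyBucket:
    """Leaky bucket (meter form): the level drains at a constant rate."""

    def __init__(self, leak_rate, capacity, clock=time.monotonic):
        self.leak_rate = leak_rate    # requests drained per second
        self.capacity = capacity      # how many requests the bucket can hold
        self.clock = clock            # injectable time source, handy for tests
        self.level = 0.0
        self.updated = clock()

    def allow(self):
        """Add one request to the bucket, or return False if it would overflow."""
        now = self.clock()
        # Drain the bucket for the time that has passed since the last call.
        drained = (now - self.updated) * self.leak_rate
        self.level = max(0.0, self.level - drained)
        self.updated = now
        if self.level + 1 <= self.capacity:
            self.level += 1
            return True
        return False  # bucket is full: the request overflows and is discarded
```

Compared with the token bucket, this variant does not let an idle client save up extra burst allowance beyond the bucket size, which is why it produces a steadier outflow.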

Key Strategies for Implementing API Rate Limits

Analyze API usage patterns

Before defining rate limits, analyze your API’s usage patterns and find the average number of requests per client or user. This helps you set rate limits that handle the expected traffic without creating performance issues or bottlenecks.

- Track API usage patterns

- Monitor request frequencies

- Find peak traffic hours
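As a starting point for the analysis above, the sketch below summarizes a request log. It assumes the log is already available as a list of `(client_id, timestamp)` pairs; the function name `summarize_usage` and the shape of the result are illustrative choices, not part of any particular tool.

```python
from collections import Counter
from datetime import datetime


def summarize_usage(log):
    """Summarize a list of (client_id, datetime) request records."""
    per_client = Counter(client for client, _ in log)
    per_hour = Counter(ts.hour for _, ts in log)
    # Average number of requests per distinct client.
    average = sum(per_client.values()) / len(per_client) if per_client else 0.0
    # Hour of day (0-23) with the most requests.
    peak_hour = per_hour.most_common(1)[0][0] if per_hour else None
    return {
        "per_client": dict(per_client),
        "average_per_client": average,
        "peak_hour": peak_hour,
    }
```

The per-client counts and the peak hour give a first baseline for choosing limits that sit comfortably above normal usage.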

Set rate limit tiers

Consider implementing rate limit tiers based on user roles, API endpoints, or subscription plans. By classifying users into tiers, you can assign rate limits that match their usage requirements and prioritize resource allocation.

- Divide users according to usage patterns

- Build tiered rate limits for various user groups

- Allocate resources according to user tiers
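One simple way to express tiers is a lookup table from tier name to limit. The tier names and numbers below are hypothetical placeholders, and `limit_for` is an illustrative helper that falls back to the most restrictive tier for unknown users.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RateLimit:
    requests: int      # allowed requests...
    per_seconds: int   # ...within this time window


# Hypothetical tiers; real values should come from your usage analysis.
TIER_LIMITS = {
    "free": RateLimit(requests=60, per_seconds=60),
    "pro": RateLimit(requests=600, per_seconds=60),
    "enterprise": RateLimit(requests=6000, per_seconds=60),
}


def limit_for(tier):
    """Return the limit for a tier, defaulting to the free tier."""
    return TIER_LIMITS.get(tier, TIER_LIMITS["free"])
```

Keeping the table in one place makes it easy to adjust a tier without touching the code that enforces the limit.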

Integrate dynamic rate limiting

Dynamic rate limiting means adjusting rate limits according to real-time server load and traffic patterns. You can scale rate limits up or down automatically, using algorithms or machine learning models, to improve resource utilization and keep API performance steady.

- Use AI algorithms for dynamic rate limiting

- Modify rate limits according to traffic fluctuations

- Enhance resource allocation in real-time
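The sketch below shows the general idea with a plain threshold rule standing in for a machine learning model. It assumes you already have a load reading between 0 and 1 (for example, CPU utilization); the function name `adjusted_limit`, the 50% threshold, and the `floor` parameter are all assumptions made for this example.

```python
def adjusted_limit(base_limit, load, floor=0.25):
    """Scale a rate limit down linearly once load passes 50%.

    `load` is a utilization reading in [0, 1]; `floor` is the smallest
    fraction of `base_limit` that is still granted at full load.
    """
    load = min(max(load, 0.0), 1.0)   # clamp unexpected readings
    if load <= 0.5:
        return base_limit             # plenty of headroom: keep the full limit
    # Move linearly from 100% of the limit at load 0.5 to `floor` at load 1.0.
    factor = 1.0 - (load - 0.5) * 2 * (1.0 - floor)
    return max(1, int(base_limit * factor))
```

A limiter would call this periodically and apply the returned value as the current limit, so clients see tighter limits only while the server is under pressure.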

Transparent feedback on rate limit violations

When users exceed their rate limits, it’s crucial to return accurate status codes and error messages that alert them to the violation. With informative feedback, users can understand why they exceeded the limit and take appropriate action to avoid future violations.

- Add error handling for requests that exceed the rate limit

- Show informative error messages to users

- Provide guidance on staying within rate limits
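In HTTP APIs, the conventional way to signal this is status code 429 (Too Many Requests), usually with a `Retry-After` header telling the client how long to wait. The sketch below builds such a response as a plain `(status, headers, body)` tuple; the function name, the JSON field names, and the message wording are illustrative assumptions, not a standard format.

```python
import json
import math
from http import HTTPStatus


def rate_limit_response(limit, window_seconds, retry_after_seconds):
    """Build a (status, headers, body) tuple for a rejected request."""
    # Retry-After takes whole seconds, so round up and wait at least 1 second.
    retry_after = max(1, math.ceil(retry_after_seconds))
    headers = {
        "Content-Type": "application/json",
        "Retry-After": str(retry_after),
    }
    body = json.dumps({
        "error": "rate_limit_exceeded",
        "message": (
            f"Limit of {limit} requests per {window_seconds} seconds exceeded. "
            f"Retry in {retry_after} seconds."
        ),
    })
    return int(HTTPStatus.TOO_MANY_REQUESTS), headers, body
```

Most web frameworks can turn a tuple like this into an actual response, and well-behaved clients can use the header to back off automatically.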

Analyze and monitor rate limit use

Regularly analyze and monitor rate limit usage metrics to find unusual patterns or spikes that may hint at errors or misuse of API resources. Monitoring usage data helps you tune rate limits, address problems proactively, and optimize overall API performance.

- Establish tracking tools for rate limit utilization

- Examine usage metrics for abnormalities

- Integrate proactive measures to avoid abuse
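A very small example of such monitoring is counting how often each client is rejected and flagging the outliers. The sketch assumes the limiter records each decision as a `(client_id, allowed)` pair; the function name `flag_heavy_offenders` and the threshold are hypothetical.

```python
from collections import Counter


def flag_heavy_offenders(events, threshold):
    """Return clients rejected more than `threshold` times, sorted by name.

    `events` is an iterable of (client_id, allowed) pairs, where `allowed`
    is False when the rate limiter rejected the request.
    """
    rejected = Counter(client for client, allowed in events if not allowed)
    return sorted(client for client, count in rejected.items() if count > threshold)
```

Clients that show up here repeatedly are candidates for a closer look: they may need a higher tier, or they may be misusing the API.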

Implementing Caching for Optimized API Performance

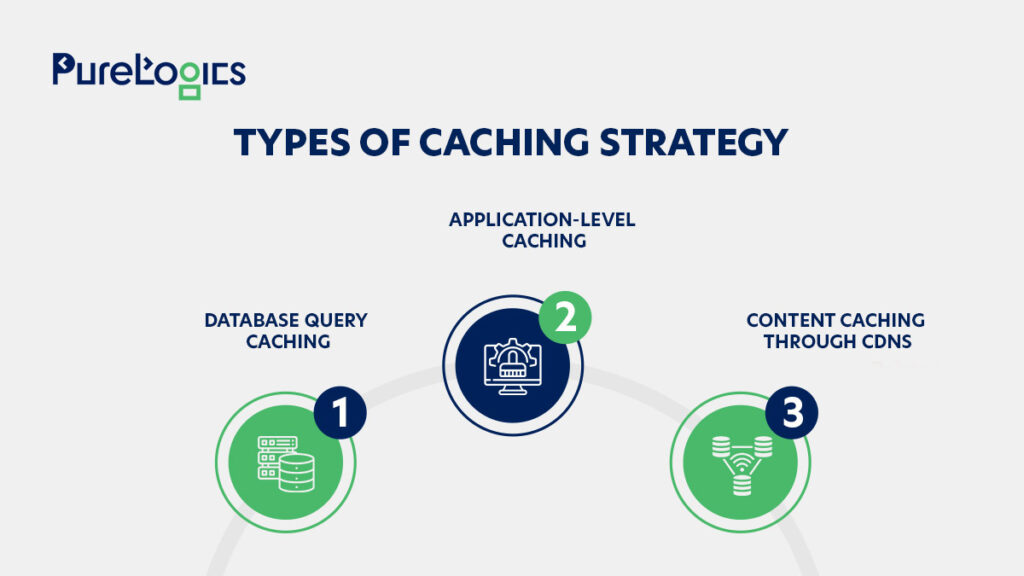

Let’s explore the significance of caching in optimizing API speed, the different types of caching strategies available, and ways to implement effective caching mechanisms.

1. Database query caching

This involves caching the results of database queries to prevent redundant database access. By keeping query results in memory or a dedicated cache, subsequent requests for the same data can be served directly from the cache, bypassing expensive database queries. This can improve API responsiveness and lower database load, especially for read-intensive workloads.
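The sketch below illustrates the idea with Python's built-in `sqlite3` module and an in-memory dictionary as the cache. The wrapper class `CachedQueries` and its `db_hits` counter are made up for this example, and the sketch deliberately leaves out expiration and invalidation.

```python
import sqlite3


class CachedQueries:
    """Cache SELECT results in memory, keyed by SQL text and parameters."""

    def __init__(self, conn):
        self.conn = conn
        self.cache = {}
        self.db_hits = 0   # how many times the database was actually queried

    def fetch(self, sql, params=()):
        key = (sql, tuple(params))
        if key not in self.cache:
            # Cache miss: run the query once and remember the rows.
            self.db_hits += 1
            self.cache[key] = self.conn.execute(sql, params).fetchall()
        return self.cache[key]
```

Repeated calls to `fetch` with the same SQL and parameters reach the database only once; any write to the underlying tables would require clearing the affected entries.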

2. Application-level caching

Application-level caching, also known as memoization, involves storing data in the application’s memory for instant retrieval. This type of caching is best suited to frequently accessed data that is shared across requests and relatively static, which makes it simpler to implement and manage.
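In Python, the standard library's `functools.lru_cache` decorator is one straightforward way to memoize a function. The `country_name` function and the `CALLS` counter below are hypothetical stand-ins for an expensive lookup.

```python
from functools import lru_cache

CALLS = {"count": 0}   # tracks how often the real lookup runs


@lru_cache(maxsize=128)
def country_name(code):
    """Look up a country name; the body stands in for an expensive operation."""
    CALLS["count"] += 1
    names = {"US": "United States", "DE": "Germany"}
    return names.get(code, "Unknown")
```

After the first call with a given code, later calls are answered from memory without running the function body again. `country_name.cache_info()` reports hits and misses, and `country_name.cache_clear()` empties the cache.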

3. Content caching through CDNs

This strategy involves caching static assets such as CSS files, JavaScript libraries, and images at edge locations distributed around the globe. CDNs cache data closer to end users, minimizing latency and speeding up delivery of static resources. By offloading static content to CDNs, APIs can focus on serving dynamic content and processing business logic, resulting in quicker response times and better performance.
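CDNs generally decide what to cache from the `Cache-Control` response header sent by the origin server. The sketch below picks a header based on the request path; the suffix list, the one-year `max-age`, and the choice of `no-store` for everything else are assumptions for illustration and should be adapted to your content.

```python
# File types treated as static assets in this sketch (an assumption).
STATIC_SUFFIXES = (".css", ".js", ".png", ".jpg", ".svg", ".woff2")


def cache_headers(path):
    """Return Cache-Control headers for a request path."""
    if path.endswith(STATIC_SUFFIXES):
        # Long-lived and shareable: safe for CDN edge caches when file
        # names change with every release (for example, hashed names).
        return {"Cache-Control": "public, max-age=31536000, immutable"}
    # Dynamic API responses: tell caches not to store them.
    return {"Cache-Control": "no-store"}
```

With headers like these, the CDN absorbs requests for static files while dynamic endpoints continue to reach the API directly.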

Implementing Proven Caching Strategies for Performance

Find cacheable data

Start by identifying data that is suitable for caching, such as static content, results of computationally expensive calculations, or frequently accessed resources. Not all content is suited to caching, so prioritize caching efforts based on how often the data is accessed and how much it affects API performance.

Define cache expiration policies

Set cache expiration policies to keep cached data fresh and up to date. Consider factors such as update frequency, expiration time window, and data volatility when configuring them. Use techniques like invalidation on data updates, cache warming, or time-based expiration to maintain cache consistency and prevent stale data from being served to users.
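Time-based expiration, the simplest of these policies, can be sketched as a cache that stores an expiry time next to each value. The class name `TTLCache` and its parameters are assumptions for this example; the `clock` argument exists only to make the behavior easy to test.

```python
import time


class TTLCache:
    """Cache whose entries expire a fixed number of seconds after being set."""

    def __init__(self, ttl_seconds, clock=time.monotonic):
        self.ttl = ttl_seconds
        self.clock = clock
        self._items = {}   # key -> (expires_at, value)

    def set(self, key, value):
        self._items[key] = (self.clock() + self.ttl, value)

    def get(self, key, default=None):
        entry = self._items.get(key)
        if entry is None:
            return default
        expires_at, value = entry
        if self.clock() >= expires_at:
            # Entry is stale: drop it so the caller fetches fresh data.
            del self._items[key]
            return default
        return value
```

A short TTL suits volatile data, while a longer one suits data that rarely changes. Choosing the value is the policy decision the paragraph above describes.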

Monitor and refine caching performance

Continuously track caching performance metrics such as hit ratio, eviction rate, and cache usage to evaluate the effectiveness of your caching strategies. Refine caching configurations based on user behavior and these metrics to get the most benefit from caching.
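To make these metrics concrete, the sketch below adds hit, miss, and eviction counters to a small least-recently-used cache. The class `InstrumentedLRU` is hypothetical; real cache servers and libraries usually expose similar counters through their own statistics interfaces.

```python
from collections import OrderedDict


class InstrumentedLRU:
    """Small LRU cache that counts hits, misses, and evictions."""

    def __init__(self, max_size):
        self.max_size = max_size
        self._items = OrderedDict()
        self.hits = 0
        self.misses = 0
        self.evictions = 0

    def get(self, key):
        if key in self._items:
            self._items.move_to_end(key)   # mark as most recently used
            self.hits += 1
            return self._items[key]
        self.misses += 1
        return None

    def put(self, key, value):
        self._items[key] = value
        self._items.move_to_end(key)
        if len(self._items) > self.max_size:
            self._items.popitem(last=False)   # evict the least recently used
            self.evictions += 1

    def hit_ratio(self):
        total = self.hits + self.misses
        return self.hits / total if total else 0.0
```

A low hit ratio combined with frequent evictions usually suggests the cache is too small or is holding the wrong data.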

Integrate cache invalidation strategies

Integrate cache invalidation mechanisms to ensure that outdated or stale data is removed from the cache promptly. Use event-driven invalidation, manual cache clearing, or time-based expiration to invalidate data when it becomes obsolete or irrelevant. Managing cache consistency and freshness improves API performance and reliability, and with them the user experience.
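Event-driven invalidation can be as simple as removing the cached entry whenever the underlying record is written. In the sketch below, the class `ProductStore` is hypothetical and a plain dictionary stands in for the database.

```python
class ProductStore:
    """Read-through cache that invalidates an entry whenever it is updated."""

    def __init__(self):
        self._db = {}      # stands in for the real database
        self._cache = {}
        self.db_reads = 0  # counts reads that reached the "database"

    def get(self, product_id):
        if product_id not in self._cache:
            self.db_reads += 1
            self._cache[product_id] = self._db.get(product_id)
        return self._cache[product_id]

    def update(self, product_id, data):
        self._db[product_id] = data
        # The write is the invalidation event: drop the stale cached copy.
        self._cache.pop(product_id, None)

    def clear_cache(self):
        """Manual cache clearing, for example after a bulk import."""
        self._cache.clear()
```

Because the cached copy is dropped on every write, the next read always sees the latest value without waiting for a timer to expire.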

Why Should You Adopt Advanced API Techniques?

Here are the main benefits of advanced API techniques:

Enhanced Scalability & Performance

Advanced API techniques such as caching, rate limiting, and throttling make APIs faster and more scalable. Throttling controls the request rate, keeping servers from getting overworked. Rate limiting caps the number of requests a user can make, so no single user consumes too many resources. Caching stores data temporarily so it can be retrieved quickly, reducing server load.

For example, e-commerce websites use these methods to handle heavy traffic during peak sales without crashing.

Quality User Experience

Improving APIs makes them respond quickly, which makes end users happier. Caching keeps frequently requested data readily available. Load balancing distributes the workload across multiple servers, keeping performance steady.

For example, streaming solutions use these methods to avoid buffering, offering a seamless viewing experience.

Operational Efficiency

Advanced API strategies streamline operations by preventing downtime and minimizing server load. Rate limiting and throttling protect servers from excessive requests, and load balancing ensures effective resource usage.

Financial services use these strategies to keep transactions running smoothly, which builds user trust. These methods let organizations operate reliably and focus on growth.

Concluding Thoughts

Advanced API techniques help apps handle many requests safely and smoothly. APIs connect different software systems, so methods such as caching and rate limiting are crucial.

Rate limiting regulates the number of requests a user can make in a set time. This avoids overload and ensures fair use. Caching stores API responses temporarily, minimizing server load and speeding up response times.

Other methods such as throttling, load balancing, monitoring, and authentication are also very useful. Throttling slows down excessive requests, load balancing distributes traffic among servers, monitoring tracks API health, and authentication ensures secure access.

These methods make APIs faster, more secure, and able to serve more users. They improve user experience, efficiency, and performance. Developers can build reliable, efficient, and robust apps using these strategies.

At PureLogics, we have a dynamic team of mobile app developers who can develop game-changing apps to help you ramp up your business. With our experienced engineers, agile methodology, and scalable technology, we can seamlessly optimize your business’s API performance. Reach out to us today.

October 25 2024
