When working with data, there are three broad approaches to privacy. The first is to use traditional, raw data: unaltered records containing personally identifiable information, which are highly valuable for analysis but extremely vulnerable to breaches. The second is anonymization, which reduces risk by removing or masking identifiers such as names or IDs; however, attackers can often re-identify individuals by combining datasets. The third is differential privacy (DP), which takes a fundamentally different approach by adding controlled mathematical noise to data or to the results computed from it.

Unlike these methods, DP offers quantifiable privacy guarantees, which is why it is widely regarded as the gold standard for privacy preservation. Major technology companies, such as OpenAI and Microsoft, use it.

This blog explains what differential privacy is, its models and advantages, its applications in AI systems, popular libraries, and the challenges you may encounter during implementation.

What Is Differential Privacy?

Differential privacy is a mathematical standard for protecting personal information by adding noise to a dataset or to the results of computations on it. Noise here means small, random alterations that ensure no individual's information can be singled out.

For example, suppose an algorithm analyzes a dataset to compute statistics such as the mean, median, or variance. Differential privacy ensures that the results reveal almost nothing about any single person: from the output alone, an observer cannot reliably tell whether a particular individual's data was included in the dataset.
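To make the mean example concrete, here is a minimal sketch of a differentially private mean using the Laplace mechanism. It assumes the dataset size is public, that every value is clipped to a known range [lower, upper], and that neighboring datasets differ by replacing one record, so one person can shift the mean by at most (upper - lower) / n. The `dp_mean` helper and the salary figures are hypothetical.

```python
import numpy as np

def dp_mean(values, lower, upper, epsilon, rng=None):
    """Return an epsilon-DP estimate of the mean (hypothetical helper).

    Assumes a public dataset size and neighbors that differ by replacing one
    record, so clipping to [lower, upper] bounds the mean's sensitivity
    at (upper - lower) / n.
    """
    rng = np.random.default_rng() if rng is None else rng
    values = np.clip(np.asarray(values, dtype=float), lower, upper)
    n = len(values)
    sensitivity = (upper - lower) / n
    # Laplace mechanism: noise scale b = sensitivity / epsilon
    noise = rng.laplace(loc=0.0, scale=sensitivity / epsilon)
    return values.mean() + noise

# Hypothetical salaries; the 150,000 outlier is clipped to the upper bound
salaries = [42_000, 55_000, 61_000, 48_000, 150_000]
rng = np.random.default_rng(0)
print(dp_mean(salaries, lower=0, upper=100_000, epsilon=1.0, rng=rng))
```

Clipping the outlier biases the estimate slightly; that is the price of bounding how much any one person can influence the released statistic.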

What Is Differential Privacy in AI?

Differential privacy in AI applies the same guarantee to machine learning: it allows aggregate analysis of a dataset while safeguarding the privacy of the people in it. Controlled noise is added, for example to the dataset before an AI system is trained on it, making it difficult to extract sensitive information from query results or from the trained model.

How Is Differential Privacy Used in AI?

When applied to AI, differential privacy allows models to learn from sensitive datasets without memorizing information about individuals. Noise can be introduced at three points:

- Input: noise is added to the data before it is used to train the AI.

- Output: noise is added to the AI's final answers and predictions.

- Training: the AI is trained on the original data, but noise is added to the learning steps so that it does not memorize personal information.

Below is a simple Python example illustrating noise addition:

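```python
import numpy as np

data = np.array([100, 200, 300])  # original (sensitive) values
epsilon = 0.5                     # privacy budget: smaller means more noise

# Laplace noise with scale sensitivity / epsilon; a sensitivity of 1 is assumed here
noise = np.random.laplace(0, 1 / epsilon, size=data.shape)

private_data = data + noise       # perturbed values released instead of the originals
print(private_data)
```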

Adding carefully calibrated noise ensures that the inclusion or exclusion of any single data point has minimal impact on the results.
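One way to see what "minimal impact" means is to compare the Laplace mechanism's output distribution on two neighboring datasets. The sketch below assumes a counting query (sensitivity 1) whose true answer is 100 on one dataset and 101 on a neighbor that adds one person. For every possible noisy output, the ratio of the two probability densities stays within a factor of e^ε. The counts and the `laplace_pdf` helper are illustrative assumptions.

```python
import numpy as np

def laplace_pdf(x, mu, b):
    """Density of the Laplace distribution centred at mu with scale b."""
    return np.exp(-np.abs(x - mu) / b) / (2 * b)

epsilon = 0.5
sensitivity = 1.0           # adding or removing one person changes a count by at most 1
b = sensitivity / epsilon   # Laplace scale used by the mechanism

count_without, count_with = 100, 101   # hypothetical neighboring datasets
outputs = np.linspace(90, 111, 2001)   # candidate noisy answers

ratio = laplace_pdf(outputs, count_with, b) / laplace_pdf(outputs, count_without, b)
print(f"max ratio = {ratio.max():.4f}, e^epsilon = {np.exp(epsilon):.4f}")
```

Because no output is much more likely under one dataset than the other, an observer of the noisy count cannot confidently infer whether that one person was present.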

Key Parameters of Differential Privacy

The strength of the privacy guarantee is controlled by two key parameters:

- Epsilon (ε), or the privacy budget, bounds how much the probability of any output can change (by a factor of at most e^ε) when one individual's data is added or removed. A smaller ε offers stronger privacy but requires more noise, which usually reduces accuracy.

- Delta (δ) is the small probability that the ε guarantee does not hold. A δ close to zero means a very low chance of information leakage. Choosing ε and δ involves balancing privacy protection against data utility.
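δ typically enters through the Gaussian mechanism. A classic textbook calibration sets the noise standard deviation to σ = Δ₂·√(2 ln(1.25/δ))/ε, where Δ₂ is the query's L2 sensitivity; this bound gives (ε, δ)-DP only for 0 < ε < 1. The sketch below assumes that bound; the `gaussian_sigma` name is hypothetical.

```python
import math

def gaussian_sigma(sensitivity, epsilon, delta):
    """Noise std from the classic Gaussian mechanism bound (hypothetical helper).

    The bound is only valid for 0 < epsilon < 1 and 0 < delta < 1.
    """
    if not 0 < epsilon < 1:
        raise ValueError("this bound only holds for 0 < epsilon < 1")
    if not 0 < delta < 1:
        raise ValueError("delta must be in (0, 1)")
    return sensitivity * math.sqrt(2 * math.log(1.25 / delta)) / epsilon

# Same epsilon, progressively smaller delta
for delta in (1e-3, 1e-5, 1e-7):
    print(f"delta={delta:g}: sigma={gaussian_sigma(1.0, 0.5, delta):.2f}")
```

Shrinking δ by several orders of magnitude increases σ only moderately, because δ enters through a logarithm, whereas halving ε doubles σ.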

With these parameters in mind, let's look at where the noise is added.

Models of Differential Privacy

Differential privacy can be deployed in two main ways, depending on where the noise is introduced. Each model offers a different trade-off between trust, accuracy, and privacy:

Global Differential Privacy (GDP)

In GDP, noise is added to the output of an algorithm that operates on the complete dataset. It is used when an aggregator holds the original data and adds noise before releasing query results.

This approach relies on a trustworthy aggregator that never reveals the original data or the noise-free results. However, this dependence on a trusted curator can become a point of vulnerability when data sensitivity is high or trust is limited.

The next model, Local Differential Privacy, removes that dependence by adding noise to the raw data before it is sent.

Local Differential Privacy (LDP)

In LDP, noise is added to the raw data before it is sent to the data aggregator. This offers a stronger privacy guarantee, as individuals do not have to trust a central entity with their raw data.

While LDP offers enhanced privacy, it often reduces data utility and accuracy compared with GDP. Because noise is applied to each data point independently, the overall signal in the aggregated data becomes weaker, and much more data is needed to reach an accuracy similar to that of GDP.
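The accuracy gap between the two models shows up clearly in a small simulation. The sketch below assumes each of n users holds a yes/no answer. In the global model, a trusted aggregator releases the true count plus Laplace noise (sensitivity 1). In the local model, each user applies randomized response, a classic LDP technique: they report their true answer with probability e^ε/(1+e^ε) and the opposite answer otherwise, and the aggregator debiases the total. The population size, the 30% "yes" rate, and the function names are hypothetical.

```python
import numpy as np

def central_count(answers, epsilon, rng):
    """Global model: a trusted aggregator adds Laplace noise to the true count."""
    return answers.sum() + rng.laplace(0.0, 1.0 / epsilon)

def local_count(answers, epsilon, rng):
    """Local model: each user randomizes their own bit before sharing it."""
    p_truth = np.exp(epsilon) / (1 + np.exp(epsilon))
    keep = rng.random(answers.size) < p_truth
    reported = np.where(keep, answers, 1 - answers)
    n = answers.size
    # Debias: E[sum(reported)] = (2p - 1) * true_count + (1 - p) * n
    return (reported.sum() - (1 - p_truth) * n) / (2 * p_truth - 1)

rng = np.random.default_rng(7)
n, epsilon = 10_000, 1.0
answers = (rng.random(n) < 0.3).astype(int)   # hypothetical: about 30% answer "yes"
true_count = answers.sum()

central_errors = [abs(central_count(answers, epsilon, rng) - true_count) for _ in range(200)]
local_errors = [abs(local_count(answers, epsilon, rng) - true_count) for _ in range(200)]
print(f"true count: {true_count}")
print(f"mean abs error, global model: {np.mean(central_errors):.1f}")
print(f"mean abs error, local model:  {np.mean(local_errors):.1f}")
```

At the same ε, the local-model error is far larger, and it grows roughly with √n, whereas the global-model error does not depend on n at all.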

Advantages of Differential Privacy

Below are the advantages of using differential privacy in a project:

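- Quantifiable level of privacy: Differential privacy provides a measurable privacy guarantee, whereas traditional anonymization methods can be vulnerable to re-identification attacks.

- Protection from linkage attacks: The guarantee holds regardless of what other data an attacker may have, so linking a DP result with outside datasets does not undo the protection. At the same time, it preserves the utility of data for aggregate analysis, allowing organizations to extract insights without compromising individual privacy.

- Composability: The privacy loss from multiple analyses combines in a predictable way (under basic composition, the ε values simply add up). This makes differential privacy valuable for privacy risk management, since organizations can track a total privacy budget and tune the accuracy of each query to its privacy requirements.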

- Compliance: It helps organizations comply with data protection regulations, such as the GDPR.
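The composability point can be illustrated with a minimal budget tracker. It assumes basic (sequential) composition, under which the ε and δ of successive analyses on the same data add up; real libraries often use tighter accounting methods. The `PrivacyBudget` class and its methods are hypothetical.

```python
from dataclasses import dataclass, field

@dataclass
class PrivacyBudget:
    """Hypothetical tracker of cumulative privacy loss under basic composition."""
    total_epsilon: float
    total_delta: float = 0.0
    spent_epsilon: float = 0.0
    spent_delta: float = 0.0
    log: list = field(default_factory=list)

    def spend(self, name, epsilon, delta=0.0):
        """Record a query, refusing it if it would exceed the overall budget."""
        # Small tolerances absorb floating-point rounding in the running sums
        if (self.spent_epsilon + epsilon > self.total_epsilon + 1e-12
                or self.spent_delta + delta > self.total_delta + 1e-15):
            raise RuntimeError(f"query '{name}' would exceed the privacy budget")
        self.spent_epsilon += epsilon
        self.spent_delta += delta
        self.log.append((name, epsilon, delta))

    @property
    def remaining_epsilon(self):
        return self.total_epsilon - self.spent_epsilon

budget = PrivacyBudget(total_epsilon=1.0, total_delta=1e-5)
budget.spend("average age", epsilon=0.3)
budget.spend("count by region", epsilon=0.5, delta=1e-6)
print(f"remaining epsilon: {budget.remaining_epsilon:.2f}")
try:
    budget.spend("income histogram", epsilon=0.4)   # 0.8 + 0.4 > 1.0, so refused
except RuntimeError as err:
    print(err)
```

Splitting a fixed budget this way is what lets each query's accuracy be tuned: a query granted a larger share of ε receives less noise.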

These advantages make differential privacy a trusted technique used in various industries.

Uses of Differential Privacy in Various Industries

Because it enables the analysis of sensitive data without exposing individuals, differential privacy is used in various industries, such as healthcare, finance, and government.

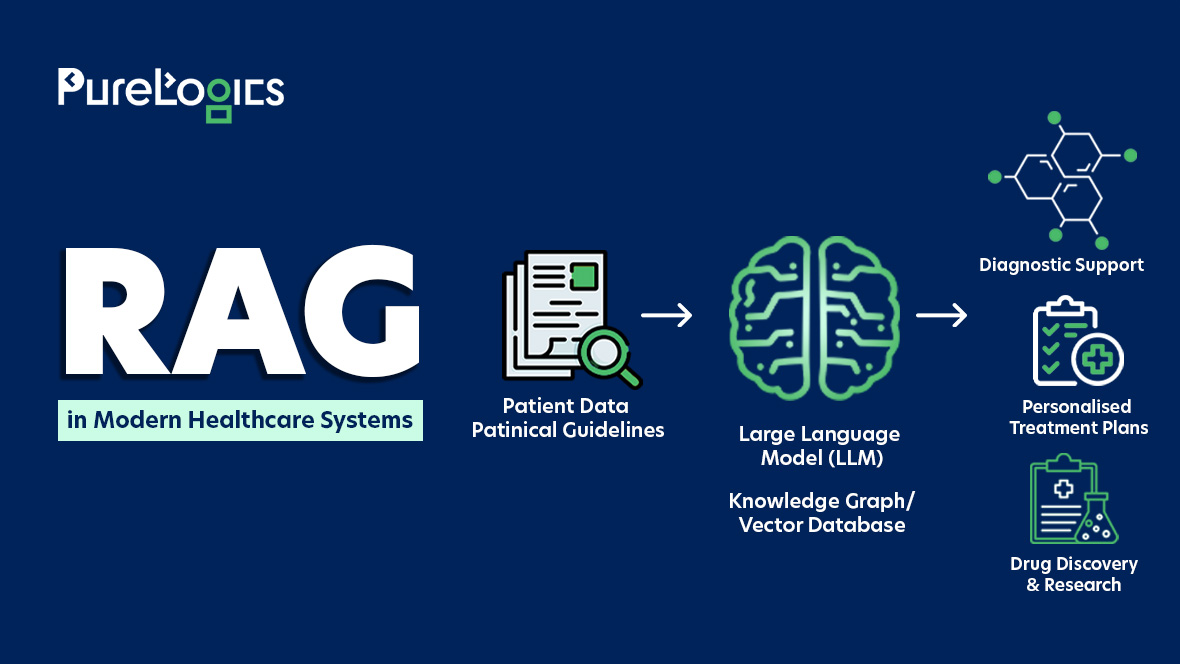

- Healthcare: It allows the analysis of patient records while ensuring confidentiality. Researchers also use it when training AI models on medical images, enabling the models to learn without exposing private health information.

- Finance: It supports fraud detection and secure data sharing, allowing institutions to gain insights from transaction data without putting financial details at risk.

- Government: Government agencies use it to safeguard sensitive information, such as census data, while releasing demographic statistics for public use.

While differential privacy benefits multiple industries, it plays a crucial role in AI systems, enabling models to learn from data while preserving individual privacy.

Uses of Differential Privacy in AI Systems

Differential privacy enables AI systems to learn from data while maintaining the confidentiality of individual information. Here are some practical uses:

- Training Large Language Models (LLMs): Protects sensitive text data, such as medical records or personal conversations, so the model cannot memorize private information.

- Image classification: Keeps personal or medical images secure while training AI models for tasks such as disease detection or facial recognition.

- Web optimization: Analyzes user behaviour for search engines or voice queries while keeping individual user activity safe.

To make these AI applications possible while preserving privacy, specialized libraries that integrate differential privacy can be used.

Popular Libraries for Differential Privacy in AI

There are several Python libraries that help implement differential privacy in AI and ML models, such as:

- TensorFlow Privacy: Integrates DP with TensorFlow models, including support for differentially private stochastic gradient descent (an algorithm that trains AI models step by step using random data samples for efficiency).

- PyDP: Python wrapper for Google's Differential Privacy library, allowing easy DP integration in Python workflows.

- Opacus: A PyTorch library for training deep learning models with built-in differential privacy features.
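TensorFlow Privacy and Opacus both implement DP-SGD for you, including the privacy accounting. To show the core idea they automate, here is a simplified NumPy sketch of DP-SGD for logistic regression; it is not either library's API. Each example's gradient is clipped to a maximum L2 norm, the clipped gradients are summed, Gaussian noise scaled to that norm is added, and the result is averaged. The function name, the synthetic data, and the values of `clip_norm` and `noise_multiplier` are illustrative assumptions, and the sketch omits privacy accounting.

```python
import numpy as np

def dp_sgd_step(w, X, y, lr, clip_norm, noise_multiplier, rng):
    """One simplified DP-SGD update for logistic regression (illustrative only)."""
    # Per-example gradients of the logistic loss: (sigmoid(x.w) - y) * x
    preds = 1.0 / (1.0 + np.exp(-X @ w))
    per_example_grads = (preds - y)[:, None] * X  # shape (batch, features)

    # Clip each example's gradient so no single record can dominate the update
    norms = np.linalg.norm(per_example_grads, axis=1, keepdims=True)
    scale = np.minimum(1.0, clip_norm / np.maximum(norms, 1e-12))
    clipped = per_example_grads * scale

    # Sum, add Gaussian noise calibrated to the clipping norm, then average
    noise = rng.normal(0.0, noise_multiplier * clip_norm, size=w.shape)
    noisy_grad = (clipped.sum(axis=0) + noise) / len(X)
    return w - lr * noisy_grad

# Hypothetical synthetic data: labels come from a known linear rule
rng = np.random.default_rng(42)
X = rng.normal(size=(256, 3))
true_w = np.array([1.5, -2.0, 0.5])
y = (X @ true_w > 0).astype(float)

w = np.zeros(3)
for _ in range(200):
    batch = rng.choice(len(X), size=64, replace=False)
    w = dp_sgd_step(w, X[batch], y[batch], lr=0.5, clip_norm=1.0,
                    noise_multiplier=1.0, rng=rng)
print(w)
```

Clipping bounds each record's influence on an update, which is what allows the added noise to provide a privacy guarantee; a real library additionally samples batches in a specific way and tracks the cumulative ε across steps.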

While these libraries make implementation easier, applying DP can still be challenging, and we’ll tell you why.

Challenges of Implementing Differential Privacy in AI

Differential privacy is powerful, but implementing it comes with practical difficulties, including the following:

- Balance between privacy and accuracy: Adding noise protects data but can reduce model performance or prediction accuracy.

- Complex parameter tuning: Selecting the right privacy budget (ε) and δ can be challenging and requires balancing privacy with usability.

- Computational overhead: Deep learning with differential privacy can increase training time and resource requirements.

- Less data utility: High levels of noise can make datasets less useful for analysis, particularly with smaller datasets.

- Integration complexities: Adding DP to existing AI pipelines may require significant changes to algorithms and workflows.

- Limited expertise: Correct implementation requires specialized knowledge, which can be hard to find.
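The privacy-accuracy trade-off and the small-dataset problem can be quantified for a simple case. For a mean of values clipped to [0, U] over n records, the Laplace mechanism uses scale b = U/(nε), and the expected absolute error of Laplace noise equals its scale b. The short sketch below tabulates that error; the range U = 100, the grid of n and ε values, and the helper name are illustrative assumptions.

```python
def expected_abs_error(upper, n, epsilon):
    """Expected |noise| of a Laplace-noised mean over n values clipped to [0, upper]."""
    sensitivity = upper / n          # one record can move the mean by at most upper / n
    return sensitivity / epsilon     # E|Laplace(0, b)| = b

upper = 100.0                        # hypothetical value range, e.g. ages 0 to 100
for n in (100, 10_000):
    for epsilon in (0.1, 1.0, 10.0):
        err = expected_abs_error(upper, n, epsilon)
        print(f"n={n:>6}, epsilon={epsilon:>4}: expected error ~ {err:.3f}")
```

Under these assumptions, a tenfold smaller ε means a tenfold larger error, while a hundredfold larger dataset cuts the error a hundredfold, which is why DP weighs most heavily on small datasets.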

Implementing differential privacy can be hard, but you do not have to do it alone. PureLogics can help you design and implement AI solutions that safeguard sensitive data while delivering actionable insights. With 20+ years of experience, we specialize in:

- Ensuring regulatory compliance without any compromise on model performance.

- Optimizing AI systems to balance privacy and accuracy.

To know more, book a 30-minute free consultation with our AI experts.

Frequently Asked Questions

What is the main goal of differential privacy in machine learning?

The main goal of differential privacy is to protect individual data while still allowing useful insights to be learned from a dataset. In machine learning, it ensures that the presence or absence of any one person's data has only a limited, measurable effect on the model's output. This way, organizations can train models and share results without exposing information about individuals.

What is differential privacy in LLM?

It is a technique for training or fine-tuning large language models so that sensitive information in the training data cannot be traced back to individuals. It adds controlled noise during training so the model learns general patterns without memorizing or revealing private details such as names, addresses, or confidential records.

How does differential privacy enhance AI security?

In AI security, differential privacy protects sensitive information while still allowing AI systems to learn from data. It works by introducing controlled randomness, which makes it very difficult to trace any individual's data within the model. This minimizes the risk of data leaks and limits the success of re-identification attacks.

October 2 2025
