Despite companies pouring $30–40 billion into generative AI, most pilot projects still fail to deliver meaningful results. According to the MIT Media Lab study The State of AI in Business 2025, 95% of AI initiatives generate no measurable returns. The study, which analyzed over 300 public projects, conducted 52 organizational interviews, and collected 153 executive surveys at four major conferences, highlights a critical truth: AI success depends not just on technology but also on strategy and on avoiding the common pitfalls that can doom a project from the start.

In this post, we discuss these pitfalls, because green-lighting a project just because it involves AI is not enough. Let’s start with the first and most common one: implementing AI without first defining the business problem.

Four Critical Pitfalls Undermining AI Product Success

Most AI products fail not because of technology limitations, but because of four preventable mistakes. Understanding these pitfalls can make the difference between a wasted investment and a competitive advantage.

1. Building Technology Solutions in Search of Business Problems

Enterprises have a long history of rushing towards the next big thing (remember blockchain, the metaverse, and Web3). The same MIT study also points out that roughly 50–70% of AI budgets are allocated to superficial AI projects with little to no deeper value.

In short, one of the most common and costly mistakes enterprises make with AI is starting with the wrong question. Rather than asking “What problem costs us the most?”, companies often ask “What can AI do?” This mindset, which focuses on AI capabilities rather than actual business pain points, produces AI solutions that are technically impressive but rarely solve the issues that matter most to customers. Below are some cross-industry examples.

- Fintech: AI systems have been shown to improve fraud detection (in some cases, 2% vs 8% increase in detection from traditional systems). However, deploying AI without careful design can backfire, resulting in high false-positive rates and even missed fraud cases.

- Retail: AI can reduce excess inventory by up to 30% and enhance forecast accuracy; however, if retailers deploy it without considering practical constraints, the same technology can create gaps and false alarms. For instance, Walmart deployed an AI-powered shoplifting detection system, and employees reported that it misidentified normal behavior as theft and often failed to stop actual theft (source: wired.com).

- Healthcare: The adoption of AI in healthcare systems can increase efficiency, but improper implementation can lead to security breaches and bias. A study of 2,425 U.S. hospitals found that only 44% of those using predictive AI tools evaluated them for bias.

The examples above show that the problem wasn’t the AI technology itself, but how it was used. Without a clear definition of the business problem and proper alignment of model training and data to that goal, even advanced AI systems fail to deliver value. As a leader, your focus should be on asking the right questions: What problem are we solving, and does our data truly represent it? In addition, back every AI initiative with a documented business case. The case should identify pain points, define measurable success metrics, and ensure alignment between technological capabilities and business needs.

2. Neglecting Governance & Data Infrastructure Requirements

Many organizations adopt AI projects without assessing whether the data is clean, integrated, or governed properly, which leads to failed projects, delays, and wasted resources. It is essential to remember that AI models are only as accurate as the data they rely on, and inadequate data infrastructure or weak governance can yield inaccurate and biased results, even if the AI itself is sophisticated. The following cross-industry examples will further elaborate on this point.

- Financial services: Legacy banking systems can slow down real-time AI for fraud detection, leading to increased false positives, missed fraud, and regulatory risk, as seen with NatWest Group. Its data for 20 million customers was distributed across multiple systems, resulting in delays in detecting fraud. Earlier this year, the bank decided to consolidate its data to support faster, real-time AI fraud detection. It is already seeing promising results, with its AI system’s scam detection improving by 71% (Financial Times).

- Healthcare: Without standardization of patient records, lab results, and imaging data stored across different systems, AI predictions can become unreliable, compromising patient safety. The study “Dissecting racial bias in an algorithm used to manage the health of populations” evaluated a healthcare algorithm that assigned risk scores to patients across U.S. hospitals. It found that the model produced racial bias by using healthcare costs as a proxy for patient health, which underestimated the medical needs of Black patients because they historically receive less care than white patients.

- Retail: Fragmented data (across sales, inventory, and supply chain) can mislead AI-driven demand forecasts, leading to stockouts, overstocks, or revenue loss. For example, Walmart had inconsistent inventory and sales data, resulting in inaccurate AI forecasts. The retailer decided to standardize its data, and its AI-driven forecasting soon improved, identifying and correcting discrepancies, inefficiencies, and inaccuracies in supply-chain models (source: tech.walmart.com).

These examples show why data governance matters. Below are a few steps you can take immediately as an executive to avoid this pitfall, one of the most common in AI product development.

- Conduct a data readiness audit before starting an AI project.

- Make sure the data supports AI, meaning it’s clean, integrated, and structured.

- Maximize accountability and compliance by applying strong data governance to ensure security and integrity.
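As a rough illustration of what a first-pass data readiness audit might measure, the sketch below computes two basic indicators: the share of rows missing each required field and the share of rows with a duplicated key. The function name `audit_readiness`, the field names, and the sample records are all hypothetical, and a real audit would cover far more (lineage, access controls, freshness, and so on).

```python
from collections import Counter


def audit_readiness(records, required_fields, key_field):
    """Return simple data-quality ratios for a list of dict records.

    Hypothetical helper for illustration only.
    """
    total = len(records)
    if total == 0:
        return {"rows": 0, "missing_rate": {}, "duplicate_rate": 0.0}

    # Share of rows where each required field is absent, None, or empty.
    missing_rate = {
        field: sum(1 for r in records if r.get(field) in (None, "")) / total
        for field in required_fields
    }

    # Count rows whose key repeats a key that has already been seen.
    key_counts = Counter(r.get(key_field) for r in records)
    duplicates = sum(count - 1 for count in key_counts.values())

    return {
        "rows": total,
        "missing_rate": missing_rate,
        "duplicate_rate": duplicates / total,
    }


# Made-up sample data: one missing amount, one empty region, one repeated ID.
sample = [
    {"customer_id": 1, "amount": 120.0, "region": "EU"},
    {"customer_id": 2, "amount": None, "region": "US"},
    {"customer_id": 2, "amount": 75.5, "region": ""},
    {"customer_id": 3, "amount": 40.0, "region": "US"},
]

report = audit_readiness(sample, ["amount", "region"], "customer_id")
print(report)
```

Ratios like these give a team a concrete baseline to agree on before any model work starts; which thresholds count as "ready" is a business decision that this sketch deliberately leaves open.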

3. Addressing Compliance as a Post-Development Activity

Another common pitfall is treating compliance as a post-development activity, which is a recipe for regulatory risk. This can lead to costly redesigns and reputational damage. Early engagement, by contrast, helps ensure AI products are legally sound and market-ready. The following cross-industry examples and studies illustrate the point.

- Healthcare: Treating compliance as an afterthought, especially in an industry with laws like HIPAA, can lead to regulatory complexities, fines, and, worst of all, risk to patient health. A review study published last year, “Navigating regulatory and policy challenges for AI-enabled combination devices,” found that AI devices developed without early regulatory planning can create risks such as biased outputs, potentially compromising patient safety.

- Financial services: Deploying AI in financial services without embedding compliance from the very start can lead to bias in lending decisions and costly model redesign. The Consumer Financial Protection Bureau (CFPB) issued supervisory guidance this year after examining institutions that used AI/ML credit-scoring models and finding potential risks of violating the Equal Credit Opportunity Act (ECOA) and Regulation B. The CFPB emphasized that even advanced models must meet fair-lending and transparency requirements, and that banks cannot ignore compliance before deploying AI.

- Retail: AI deployed without compliance in retail can create fairness issues, regulatory scrutiny, and damage to consumer trust. EU consumer protection and fairness laws, for example, require pricing practices to be transparent and non-discriminatory.

Make Compliance Part of Your AI Journey

Don’t treat compliance as an afterthought; integrate it from day one to avoid costly setbacks.

Here’s what you can do as an executive: create a team that includes legal experts, compliance specialists, and a data scientist to monitor AI projects. Alternatively, you can hire an AI solution development company that already has knowledge and expertise in legal and compliance matters.

4. Deploying AI Without Explainability or Validation

Deploying AI without explainability and validation can turn powerful models into untrustworthy black boxes, exposing organizations to biased outcomes and regulatory issues. The following cross-industry examples illustrate this.

- Healthcare: AI without explainability is risky for patients. Researchers from the University of Galway, in a study titled “Unveiling the Black Box: A Systematic Review of Explainable Artificial Intelligence,” examined AI in medical image analysis and found that while models often achieve high accuracy, their black-box nature limits clinician trust. The study further highlighted the lack of standardization and evaluation metrics, emphasizing the need for explainability, validation, and interpretability in AI tools from the start.

- Financial services: Deploying AI without explainability in finance can lead to models that lack fairness assurance. That’s why the CFA Institute’s report warned that complex AI systems in credit scoring and underwriting are opaque and emphasized the need for explainability.

- Retail: Integrating AI without transparency can lead to opaque and unaccountable decisions. For example, SafeRent Solutions used an AI tenant screening system that did not explain the reasons behind its decisions (especially for Black and Hispanic applicants). This case highlights that a lack of explainability and validation can lead to biased outcomes, resulting in organizational damage.

If you are wondering what you can do as an executive, start every AI initiative with a clear validation and interpretability strategy. In addition, ensure the rationale for decisions is clearly defined, and validate the model against real-world data.
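One simple form of validation against real-world data is to compare a model’s error rates across groups once true outcomes are known. The sketch below is a minimal example under stated assumptions: the function names, the group labels, and the sample rows are hypothetical, and the false-positive rate is only one of several fairness metrics a team might choose.

```python
from collections import defaultdict


def false_positive_rate_by_group(rows):
    """Compute the false-positive rate per group.

    rows: iterable of (group, predicted_positive, actually_positive) tuples.
    Hypothetical helper for illustration only.
    """
    false_positives = defaultdict(int)
    negatives = defaultdict(int)
    for group, predicted, actual in rows:
        if not actual:  # only true negatives can become false positives
            negatives[group] += 1
            if predicted:
                false_positives[group] += 1
    return {g: false_positives[g] / n for g, n in negatives.items()}


def max_gap(rates):
    """Largest difference in rate between any two groups."""
    if not rates:
        return 0.0
    return max(rates.values()) - min(rates.values())


# Made-up outcomes: (group, model flagged it, it was actually positive).
outcomes = [
    ("A", True, False), ("A", False, False), ("A", False, False),
    ("A", False, False), ("A", True, True),
    ("B", True, False), ("B", True, False), ("B", False, False),
    ("B", False, False), ("B", True, True),
]

rates = false_positive_rate_by_group(outcomes)
gap = max_gap(rates)
print(rates, gap)
```

A large gap between groups does not by itself prove unlawful bias, but it is the kind of signal that should trigger a review before and after deployment.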

Let us give you a strategic framework that can help you make your AI products successful and avoid common pitfalls.

A Three-Phase Governance Model for AI Product Success

The table below outlines a three-phase governance model for making AI products successful, highlighting the key activities and timeline for each stage.

| Stage | Time | Key Activities |
|---|---|---|
| Strategic Alignment | 3–6 months | Risk assessment; data readiness audit; regulatory pathway mapping |
| Disciplined Development | Development cycle | Continuous user feedback; explainability requirements; change management strategy; embedded compliance review |
| Adoption | Post-deployment | ROI measurement; performance monitoring; user feedback mechanism; continuous validation against outcomes |

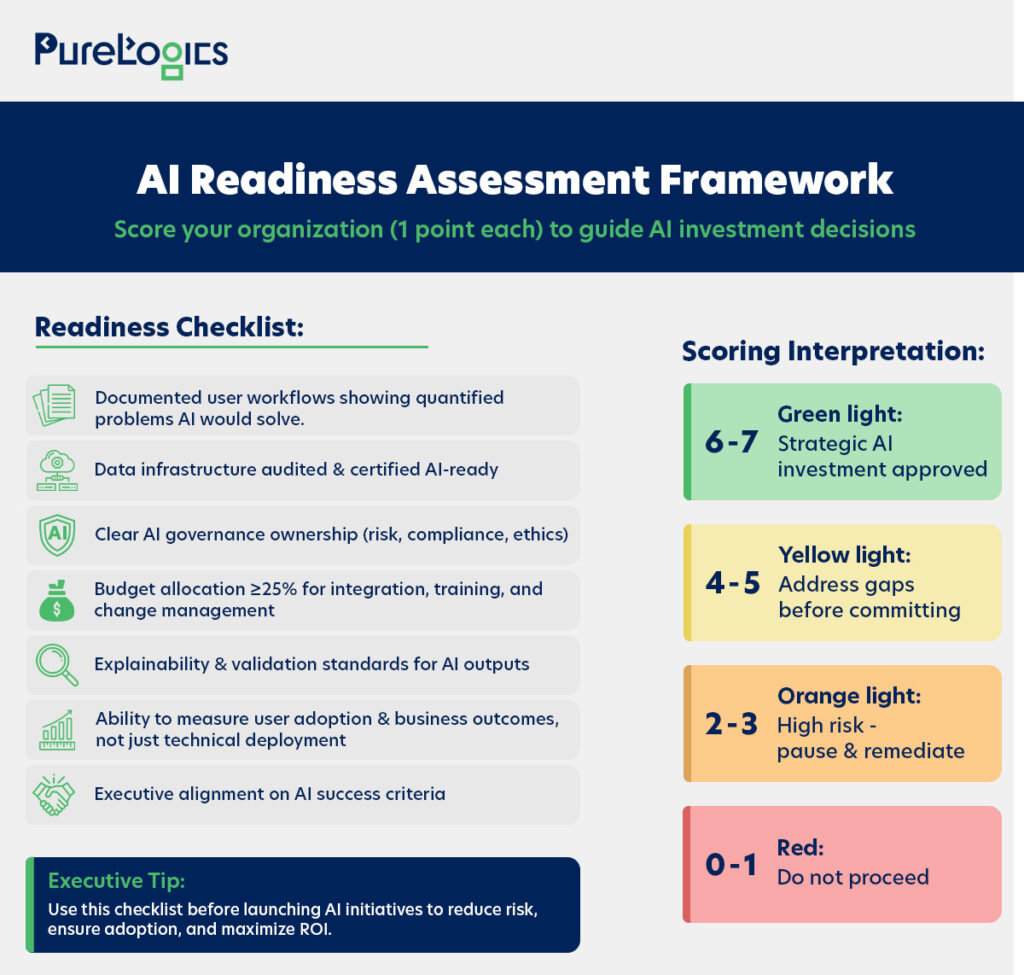

You can also assess whether your organization is ready for AI implementation using our readiness assessment framework, provided below.

Overcome Common Pitfalls to Build Sustainable AI Systems

Successfully deploying AI products requires sophisticated capabilities that many organizations lack in-house, including ML/AI expertise, a deep understanding of compliance architecture, and industry-specific knowledge. That’s why strategic partnerships are proving to be a key differentiator: 61% of companies say they intend to pursue partnerships with third-party vendors to develop AI solutions (McKinsey, 2025 survey). PureLogics has been building such partnerships over the last two decades with enterprise clients who need to bridge the gap between AI ambition and production-ready systems.

Book a 30-minute free consultation with our experts, and let’s discuss your AI roadmap.

Frequently Asked Questions

What is a common reason for AI project failure?

One of the most common reasons for AI project failure is that businesses do not fully understand their pain points or the actual problems they are trying to solve. According to the MIT Media Lab study, roughly 50–70% of AI budgets go to superficial projects that deliver little to no real value.

How does an organization assess its readiness for AI product development?

Organizations can use the Executive Readiness Assessment framework mentioned in this post to evaluate critical factors, including documented user workflows, change management, and an adequate budget.

Why is AI implementation different from other technology products?

Unlike conventional projects, AI requires a clean data infrastructure, early compliance integration, and decision explainability, because poor data can create regulatory risks and undermine user trust. This makes development more complex than typical tech deployments.

November 13 2025