Ian Goodfellow et al. introduced Generative Adversarial Networks (GANs) in 2014. Since then, GANs have reshaped generative modeling and opened up a new area of research. Later advances in machine learning have refined GAN architectures and produced increasingly realistic images. GANs have had their greatest success in computer vision, and they are now also being applied to text and audio.

This blog post covers five popular GAN architectures. For each one, it walks through the key features, an architecture breakdown, a code example, and common applications. It closes with a comparative summary.

The five architectures are:

- Deep Convolutional GAN (DCGAN)

- Wasserstein GAN (WGAN)

- Progressive Growing GAN (PGGAN)

- StyleGAN

- Conditional GAN (cGAN)

But first, let’s understand what a GAN architecture is.

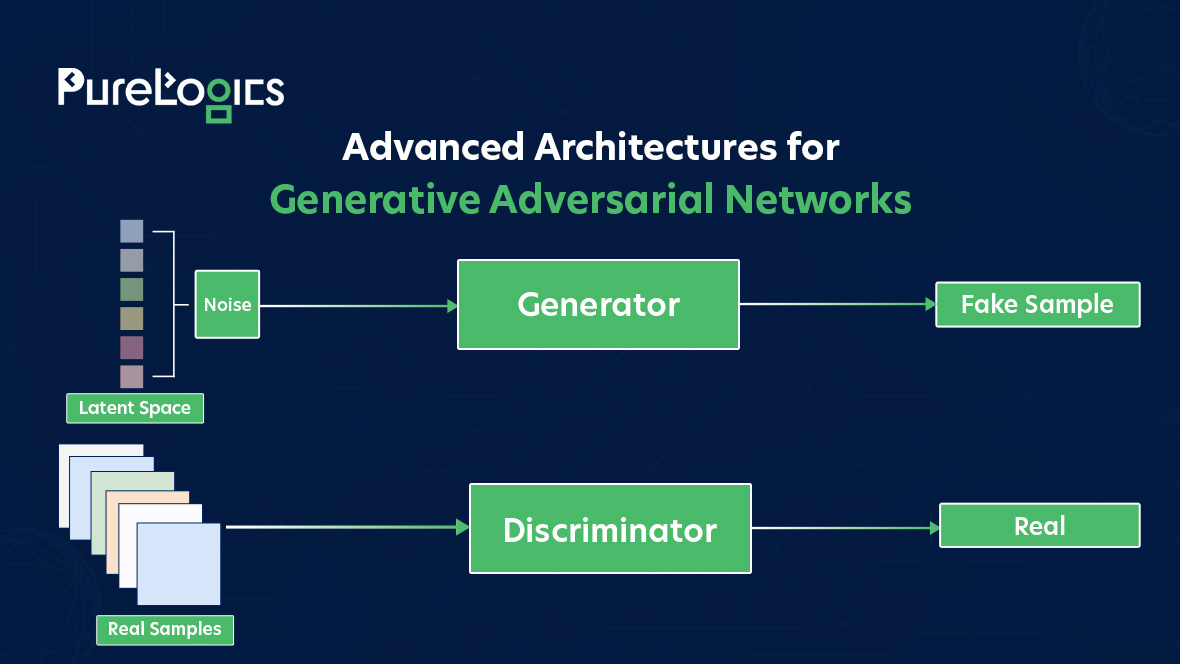

Understanding GAN Architecture

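A standard GAN consists of two neural networks: the Generator (G) and the Discriminator (D). The Generator creates synthetic data from random noise. The Discriminator estimates whether a given sample is real (from the training data) or fake (produced by the Generator). The two networks are trained against each other: the Generator tries to fool the Discriminator, and the Discriminator tries to catch the fakes. This competition pushes both networks toward better performance.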
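For reference, the original 2014 paper formalizes this competition as a two-player minimax game over a value function V(D, G). Here D(x) is the Discriminator's probability that x is real, x is drawn from the data distribution p_data, and z is drawn from a noise prior p_z:

$$
\min_G \max_D V(D, G) = \mathbb{E}_{x \sim p_{\text{data}}}\big[\log D(x)\big] + \mathbb{E}_{z \sim p_z}\big[\log\big(1 - D(G(z))\big)\big]
$$

D is trained to maximize this value by scoring real samples close to 1 and generated samples close to 0, while G is trained to minimize it.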

Basic GAN Architecture

Before we discuss advanced architectures, let’s have a quick review of the basic GAN structure.

```python
import torch
import torch.nn as nn

class Generator(nn.Module):
    def __init__(self, input_dim, output_dim):
        super(Generator, self).__init__()
        # Maps a noise vector z of size input_dim to a sample of size output_dim.
        self.model = nn.Sequential(
            nn.Linear(input_dim, 128),
            nn.ReLU(),
            nn.Linear(128, output_dim),
            nn.Tanh()  # outputs lie in (-1, 1), so real data should be scaled to that range
        )

    def forward(self, z):
        return self.model(z)

class Discriminator(nn.Module):
    def __init__(self, input_dim):
        super(Discriminator, self).__init__()
        # Maps a sample to a single probability that it is real.
        self.model = nn.Sequential(
            nn.Linear(input_dim, 128),
            nn.LeakyReLU(0.2),
            nn.Linear(128, 1),
            nn.Sigmoid()  # probability in (0, 1)
        )

    def forward(self, x):
        return self.model(x)
```
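Because the Discriminator ends in a Sigmoid, both networks are usually trained with binary cross-entropy (`nn.BCELoss` in PyTorch). The framework-free sketch below shows what that loss computes from the Discriminator's output probabilities. The helper names are hypothetical, and the generator loss uses the common "non-saturating" variant (label generated samples as real) rather than minimizing log(1 - D(G(z))) directly.

```python
import math

def bce(p, target, eps=1e-12):
    """Binary cross-entropy for one probability p and a 0/1 target."""
    p = min(max(p, eps), 1 - eps)  # clamp to avoid log(0)
    return -(target * math.log(p) + (1 - target) * math.log(1 - p))

def discriminator_loss(d_real, d_fake):
    """Real samples are labeled 1, generated samples are labeled 0."""
    real = sum(bce(p, 1) for p in d_real) / len(d_real)
    fake = sum(bce(p, 0) for p in d_fake) / len(d_fake)
    return real + fake

def generator_loss(d_fake):
    """Non-saturating loss: G is rewarded when D labels its samples as real."""
    return sum(bce(p, 1) for p in d_fake) / len(d_fake)

# D is confident and correct here, so its loss is low and G's loss is high.
d_real = [0.9, 0.8]  # D's outputs on real samples
d_fake = [0.1, 0.2]  # D's outputs on generated samples
print(discriminator_loss(d_real, d_fake), generator_loss(d_fake))
```

When D outputs 0.5 for everything (it cannot tell real from fake), its loss equals 2 log 2, which is the equilibrium value of this objective.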

Advanced GAN Architectures

Now, let’s discuss the five most popular GAN architectures in detail.

1. Deep Convolutional GAN (DCGAN)

DCGAN is one of the most well-known GAN architectures. It uses convolutional layers in both networks: transposed convolutions in the Generator upsample noise into an image, and strided convolutions in the Discriminator reduce an image to a single score. It became popular because it produces good-quality images and trains more stably than earlier fully connected GANs.

Key Features

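Convolutional Layers: Convolutions exploit the spatial structure of images, which makes DCGAN more effective on image data than a fully connected GAN. Strided convolutions take the place of pooling layers.

Batch Normalization: Normalizes each layer's inputs over the mini-batch, which stabilizes training and speeds up convergence.

ReLU and LeakyReLU: ReLU is used in the Generator (with Tanh on the output layer). LeakyReLU, which passes a small, non-zero gradient for negative inputs instead of zeroing them, is used in the Discriminator.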
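To make the last two features concrete, here is a framework-free sketch of what batch normalization computes for one feature during training, and how ReLU and LeakyReLU treat negative inputs. It is a simplification: `nn.BatchNorm2d` normalizes per channel over the batch and spatial dimensions, learns `gamma` and `beta`, and tracks running statistics for inference. The 0.2 slope matches the LeakyReLU calls in this post's code.

```python
import math

def batch_norm(xs, gamma=1.0, beta=0.0, eps=1e-5):
    """Normalize one feature over a mini-batch, then scale and shift.

    gamma and beta are learnable in a real BatchNorm layer; here they are fixed.
    """
    mean = sum(xs) / len(xs)
    var = sum((x - mean) ** 2 for x in xs) / len(xs)  # biased variance, as used in training mode
    return [gamma * (x - mean) / math.sqrt(var + eps) + beta for x in xs]

def relu(x):
    return max(0.0, x)

def leaky_relu(x, negative_slope=0.2):
    # Negative inputs are scaled down instead of being zeroed out.
    return x if x >= 0 else negative_slope * x

print(batch_norm([1.0, 2.0, 3.0, 4.0]))  # roughly [-1.34, -0.45, 0.45, 1.34]
print(relu(-2.0), leaky_relu(-2.0))      # 0.0 -0.4
```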

Architecture Breakdown

| Layer | Output Size |
|---|---|
| Input (noise) | (N, noise_dim, 1, 1) |
| ConvTranspose2d | (N, 256, 4, 4) |
| ReLU | |
| ConvTranspose2d | (N, 128, 8, 8) |
| BatchNorm2d | |
| ReLU | |
| ConvTranspose2d | (N, 64, 16, 16) |
| BatchNorm2d | |
| ReLU | |
| ConvTranspose2d | (N, 1, 32, 32) |
| Tanh | |

Code Example

```python
import torch.nn as nn

class DCGANGenerator(nn.Module):
    def __init__(self, noise_dim):
        super(DCGANGenerator, self).__init__()
        # Input: noise of shape (N, noise_dim, 1, 1); output: (N, 1, 32, 32) images.
        self.model = nn.Sequential(
            nn.ConvTranspose2d(noise_dim, 256, 4, 1, 0, bias=False),  # -> (N, 256, 4, 4)
            nn.ReLU(True),
            nn.ConvTranspose2d(256, 128, 4, 2, 1, bias=False),        # -> (N, 128, 8, 8)
            nn.BatchNorm2d(128),
            nn.ReLU(True),
            nn.ConvTranspose2d(128, 64, 4, 2, 1, bias=False),         # -> (N, 64, 16, 16)
            nn.BatchNorm2d(64),
            nn.ReLU(True),
            nn.ConvTranspose2d(64, 1, 4, 2, 1, bias=False),           # -> (N, 1, 32, 32)
            nn.Tanh()
        )

    def forward(self, x):
        return self.model(x)

class DCGANDiscriminator(nn.Module):
    def __init__(self):
        super(DCGANDiscriminator, self).__init__()
        # Input: (N, 1, 32, 32) images; output: (N, 1, 1, 1) probabilities.
        self.model = nn.Sequential(
            nn.Conv2d(1, 64, 4, 2, 1, bias=False),     # -> (N, 64, 16, 16), no BatchNorm on the first layer
            nn.LeakyReLU(0.2, inplace=True),
            nn.Conv2d(64, 128, 4, 2, 1, bias=False),   # -> (N, 128, 8, 8)
            nn.BatchNorm2d(128),
            nn.LeakyReLU(0.2, inplace=True),
            nn.Conv2d(128, 256, 4, 2, 1, bias=False),  # -> (N, 256, 4, 4)
            nn.BatchNorm2d(256),
            nn.LeakyReLU(0.2, inplace=True),
            nn.Conv2d(256, 1, 4, 1, 0, bias=False),    # -> (N, 1, 1, 1)
            nn.Sigmoid()
        )

    def forward(self, x):
        return self.model(x)
```
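The spatial sizes in the code above can be checked with the output-size formulas from PyTorch's `Conv2d` and `ConvTranspose2d` documentation (assuming the default `dilation=1` and `output_padding=0`). This plain-Python sketch applies them to each layer's (kernel, stride, padding) values: starting from a 1×1 noise input, the generator produces 32×32 images, and the discriminator reduces those back to a single 1×1 score.

```python
def conv_transpose_out(size, kernel, stride, padding):
    """Output size of nn.ConvTranspose2d with dilation=1 and output_padding=0."""
    return (size - 1) * stride - 2 * padding + kernel

def conv_out(size, kernel, stride, padding):
    """Output size of nn.Conv2d with dilation=1."""
    return (size + 2 * padding - kernel) // stride + 1

# (kernel, stride, padding) per layer, copied from the DCGAN classes.
GEN_LAYERS = [(4, 1, 0), (4, 2, 1), (4, 2, 1), (4, 2, 1)]
DISC_LAYERS = [(4, 2, 1), (4, 2, 1), (4, 2, 1), (4, 1, 0)]

def trace(size, layers, size_fn):
    """Return the spatial size after each layer, starting from `size`."""
    sizes = []
    for kernel, stride, padding in layers:
        size = size_fn(size, kernel, stride, padding)
        sizes.append(size)
    return sizes

gen_sizes = trace(1, GEN_LAYERS, conv_transpose_out)      # noise input is 1x1
disc_sizes = trace(gen_sizes[-1], DISC_LAYERS, conv_out)  # feed the 32x32 output back in
print("Generator:", gen_sizes)       # Generator: [4, 8, 16, 32]
print("Discriminator:", disc_sizes)  # Discriminator: [16, 8, 4, 1]
```

Each stride-2 layer with kernel 4 and padding 1 doubles (or halves) the size, so adding one more such block to each network would give 64×64 images.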

Applications: DCGANs are commonly used in tasks like generating images for art, facial recognition, and style transfer.

2. Wasserstein GAN (WGAN)

WGAN addresses several limitations of traditional GANs, particularly issues related to mode collapse and training instability. By using the Wasserstein distance as the loss metric, WGAN provides more meaningful gradients during training.

Key Features

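Wasserstein Loss: The Discriminator (called the "critic" in WGAN) is trained to estimate the Wasserstein-1 (Earth Mover's) distance between the real and generated distributions. Unlike the original GAN loss, this distance still provides useful gradients when the two distributions barely overlap, which makes training more stable.

Weight Clipping: After each critic update, the critic's weights are clipped to a small fixed range ([-0.01, 0.01] in the original paper). This is a simple way to enforce the Lipschitz constraint that the Wasserstein estimate requires.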

Improved Training Dynamics: Convergence is more stable, leading to better quality generated samples.
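Why does this distance help? For two equal-size sets of 1-D samples, the Wasserstein-1 distance has a simple closed form: sort both sets and average the absolute differences. The sketch below uses that to show that the distance keeps growing as generated samples move away from real ones, even when the two sets no longer overlap. In that non-overlapping case, the Jensen-Shannon divergence behind the original GAN loss stays at a constant log 2, so it gives no signal about which direction to move. This is illustrative only: a WGAN never computes W1 this way; it estimates it with the critic.

```python
def wasserstein_1d(xs, ys):
    """W1 distance between two equal-size 1-D empirical samples.

    In 1-D, the optimal transport plan matches sorted samples in order,
    so W1 is the mean absolute difference of the sorted values.
    """
    if len(xs) != len(ys):
        raise ValueError("this sketch assumes equal sample sizes")
    return sum(abs(a - b) for a, b in zip(sorted(xs), sorted(ys))) / len(xs)

real = [0.0, 1.0, 2.0]
for shift in (5.0, 10.0):
    fake = [x + shift for x in real]  # no overlap with the real samples
    print(shift, wasserstein_1d(real, fake))  # 5.0 5.0, then 10.0 10.0
```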

Architecture Breakdown

| Critic Layer | Output Size |
|---|---|
| Input (flattened image) | (N, 784) |
| Linear | (N, 512) |
| LeakyReLU | |
| Linear | (N, 256) |
| LeakyReLU | |
| Linear | (N, 1) |

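Code Example

```python
import torch.nn as nn

class WGANDiscriminator(nn.Module):
    """The WGAN critic: scores flattened images of 784 values (e.g., 28x28)."""

    def __init__(self):
        super(WGANDiscriminator, self).__init__()
        self.model = nn.Sequential(
            nn.Linear(784, 512),
            nn.LeakyReLU(0.2),
            nn.Linear(512, 256),
            nn.LeakyReLU(0.2),
            nn.Linear(256, 1)  # no Sigmoid: the critic outputs an unbounded score
        )

    def forward(self, x):
        return self.model(x)
```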
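Because the critic outputs a score rather than a probability, WGAN replaces binary cross-entropy with simple mean differences, and applies weight clipping after every critic update. The pure-Python sketch below shows those three pieces; the helper names are hypothetical, and c = 0.01 follows the original WGAN paper. In PyTorch, the clipping step is typically written as `p.data.clamp_(-c, c)` for each `p` in `critic.parameters()`, and the paper updates the critic several times (5 by default) per generator update.

```python
def wgan_critic_loss(critic_real, critic_fake):
    """Minimizing this maximizes mean(score on real) - mean(score on fake)."""
    return sum(critic_fake) / len(critic_fake) - sum(critic_real) / len(critic_real)

def wgan_generator_loss(critic_fake):
    """The generator tries to raise the critic's scores on its samples."""
    return -sum(critic_fake) / len(critic_fake)

def clip_weights(weights, c=0.01):
    """Weight clipping: clamp every critic weight to [-c, c] after each update."""
    return [max(-c, min(c, w)) for w in weights]

print(wgan_critic_loss([1.0, 3.0], [0.0, 0.0]))  # -2.0
print(clip_weights([-0.5, 0.005, 0.2]))          # [-0.01, 0.005, 0.01]
```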

Applications: WGANs are used for image generation, especially where standard GAN training is unstable or prone to mode collapse. They are also applied in scenarios where the training data is scarce or imbalanced.

3. Progressive Growing GAN (PGGAN)

PGGAN (Progressive Growing GAN) trains both networks progressively: it starts at a low resolution and adds layers that double the resolution of the generated images as training proceeds. This lets the model learn the coarse structure of images at low resolution before it tackles fine details at higher resolutions.

Key Features

Incremental Training: Starts with low-resolution images (e.g., 4×4) and progressively adds layers to both networks to increase the resolution (e.g., 8×8, 16×16) as training stabilizes. Each new layer is faded in gradually so it does not disrupt the layers that are already trained.

Better Detail: This GAN architecture learns broad features first and then refines details, which leads to higher-quality outputs with fewer artifacts.

Control Over Training: The resolution schedule is explicit, so you can decide how long to train at each resolution before growing the networks, allowing a fine-tuned approach to training.

Architecture Breakdown

| Resolution | Generator Layers |
|---|---|
| 4×4 | 2 Conv, 2 LeakyReLU |
| 8×8 | Upsample, 2 Conv, 2 LeakyReLU |
| 16×16 | Upsample, 2 Conv, 2 LeakyReLU |
| 32×32 | Upsample, 2 Conv, 2 LeakyReLU |
| 64×64 | Upsample, 2 Conv, 2 LeakyReLU |
| 128×128 | Upsample, 2 Conv, 2 LeakyReLU |
| 256×256 | Upsample, 2 Conv, 2 LeakyReLU |
| 512×512 | Upsample, 2 Conv, 2 LeakyReLU |
| 1024×1024 | Upsample, 2 Conv, 2 LeakyReLU, then a 1×1 Conv ("toRGB") for the final high-resolution output |

Code Example

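```python
import torch.nn as nn

class PGGANGenerator(nn.Module):
    """Skeleton only: the progressive-growing layers are not implemented here."""

    def __init__(self):
        super(PGGANGenerator, self).__init__()
        # Define layers for each resolution progressively…

    def forward(self, z):
        # Forward pass through progressively growing layers…
        pass
```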
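One piece the skeleton leaves out is how a new, higher-resolution block is added without disrupting training. PGGAN fades it in: the previous resolution's output is upsampled (nearest neighbour) and blended with the new block's output, using a weight alpha that rises linearly from 0 to 1. The pure-Python sketch below shows that blend on a single-channel image stored as nested lists; the helper names are hypothetical. Real implementations operate on tensors, blend the outputs of the "toRGB" layers, and fade in the matching Discriminator layers the same way.

```python
def upsample_nearest(img):
    """2x nearest-neighbour upsampling of a 2-D list (H x W -> 2H x 2W)."""
    out = []
    for row in img:
        wide = [v for v in row for _ in range(2)]  # repeat each pixel horizontally
        out.append(wide)
        out.append(list(wide))                     # repeat each row vertically
    return out

def fade_in(old_low_res, new_high_res, alpha):
    """Blend the upsampled old output with the new block's output.

    alpha = 0 uses only the old (upsampled) path; alpha = 1 uses only the new block.
    """
    up = upsample_nearest(old_low_res)
    return [[(1 - alpha) * u + alpha * n for u, n in zip(up_row, new_row)]
            for up_row, new_row in zip(up, new_high_res)]

old = [[0.0, 1.0], [1.0, 0.0]]          # output at the previous resolution
new = [[0.5] * 4 for _ in range(4)]     # output of the newly added block
for alpha in (0.0, 0.5, 1.0):           # alpha increases over the course of training
    print(alpha, fade_in(old, new, alpha)[0])
```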

Applications: PGGANs are particularly effective for high-resolution image synthesis, such as generating realistic human faces.

4. StyleGAN

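StyleGAN builds on PGGAN's progressive training but redesigns the Generator around "styles". Instead of feeding the latent vector directly into the network, it injects style information at every resolution. This allows coarse aspects of the generated image (such as pose or face shape) and fine aspects (such as color scheme or texture) to be controlled largely independently.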

Key Features

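Mapping Network: Transforms the input latent vector z into an intermediate latent vector w (an 8-layer MLP in the original StyleGAN). Learned affine transforms then turn w into the styles used at each layer.

Adaptive Instance Normalization (AdaIN): At each layer of the synthesis network, normalizes every feature map to zero mean and unit variance, then scales and shifts it using the style. Styles applied at low resolutions control broad features, while those applied at high resolutions control fine details.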

High-Quality Outputs: Produces images with improved quality and diversity, capable of generating highly realistic faces.
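AdaIN is defined per channel as AdaIN(x_i, y) = y_s,i · (x_i - μ(x_i)) / σ(x_i) + y_b,i, where μ and σ are the mean and standard deviation of one feature map of one sample, and (y_s, y_b) is the style. The pure-Python sketch below implements that formula; the style values are supplied directly here, whereas StyleGAN computes them from w with a learned affine layer. Unlike batch normalization, the statistics come from a single sample, not from the whole mini-batch.

```python
import math

def adain(content, style_scale, style_bias, eps=1e-5):
    """Adaptive Instance Normalization for one sample.

    content: list of channels, each a flat list of activations.
    style_scale, style_bias: one value per channel (y_s and y_b).
    """
    out = []
    for x, y_s, y_b in zip(content, style_scale, style_bias):
        mean = sum(x) / len(x)
        std = math.sqrt(sum((v - mean) ** 2 for v in x) / len(x) + eps)
        # Normalize the channel, then impose the style's scale and bias.
        out.append([y_s * (v - mean) / std + y_b for v in x])
    return out

styled = adain([[1.0, 2.0, 3.0, 4.0]], style_scale=[2.0], style_bias=[10.0])
print(styled)  # the channel now has mean of about 10.0 and std of about 2.0
```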

Architecture Breakdown

| Component | Output |
|---|---|
| Mapping Network | Latent Style Vector |
| Style Injection | Various resolutions |
| Synthesis Network | Output Image |

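Code Example

```python
import torch.nn as nn

class StyleGANGenerator(nn.Module):
    """Skeleton only: the mapping and synthesis networks are not implemented here."""

    def __init__(self):
        super(StyleGANGenerator, self).__init__()
        # Define the mapping and synthesis networks…

    def forward(self, z):
        # Process through mapping and synthesis networks…
        pass
```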

Applications: StyleGANs are widely used in creative applications such as character design, fashion design, and any domain requiring fine-grained control over generated imagery.

5. Conditional GAN (cGAN)

Conditional GANs generate data conditioned on additional information, such as class labels or text descriptions. This architecture extends the standard GAN by feeding that extra input to both the Generator and the Discriminator.

Key Features

Conditioning Variable: The Generator and Discriminator both receive a conditioning variable. The Generator learns to produce samples that match the condition, and the Discriminator learns to judge whether a sample is both realistic and consistent with it, which allows more controlled generation.

Flexibility: This GAN architecture can be used for various applications, including text-to-image synthesis, image-to-image translation, and more.

Improved Control: cGAN can generate data that meets specific requirements (for example, an image of a particular class), which makes it useful in targeted applications.

Architecture Breakdown

| Layer | Output Size |
|---|---|
| Input (Condition) | (N, c) |
| Concatenate | Combined input to G and D |
| Conv/Deconv layers | Depends on the task (e.g., images) |

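Code Example

```python
import torch
import torch.nn as nn

class cGANGenerator(nn.Module):
    def __init__(self, input_dim, condition_dim, output_dim):
        super(cGANGenerator, self).__init__()
        self.model = nn.Sequential(
            # Noise and condition are concatenated, so the first layer
            # takes input_dim + condition_dim features.
            nn.Linear(input_dim + condition_dim, 128),
            nn.ReLU(),
            nn.Linear(128, output_dim),
            nn.Tanh()
        )

    def forward(self, z, c):
        # z: (N, input_dim) noise; c: (N, condition_dim), e.g. one-hot labels
        x = torch.cat((z, c), dim=1)
        return self.model(x)
```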
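In the Generator above, the condition `c` is often a one-hot encoding of a class label (for handwritten digits, `condition_dim` would be 10). This pure-Python sketch, with hypothetical helper names, shows what the concatenation produces for a single sample; in PyTorch, `torch.nn.functional.one_hot(labels, num_classes).float()` builds the batched version. The Discriminator receives the same label, concatenated with its input sample.

```python
def one_hot(label, num_classes):
    """Encode an integer class label as a one-hot list."""
    vec = [0.0] * num_classes
    vec[label] = 1.0
    return vec

def condition_input(z, label, num_classes):
    """Single-sample equivalent of torch.cat((z, c), dim=1)."""
    return list(z) + one_hot(label, num_classes)

x = condition_input([0.3, -1.2], label=3, num_classes=10)
print(len(x), x.index(1.0))  # 12 5
```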

Applications: cGANs are widely used in tasks such as generating images based on specific labels (e.g., generating handwritten digits based on labels) or modifying existing images according to given constraints.

Summary of Architectures

Here’s a table summarizing the discussed architectures:

| Architecture | Key Features | Use Cases |
|---|---|---|
| DCGAN | Convolutional layers, batch normalization, stable training | Image generation, art synthesis |
| WGAN | Wasserstein distance, weight clipping, better stability | Image generation where standard GAN training is unstable |
| PGGAN | Progressive training of resolutions, detail refinement | High-resolution image synthesis |
| StyleGAN | Style-based generation, control over features | Character design, portrait generation |
| cGAN | Conditioning for controlled generation | Text-to-image synthesis, image modification |

Final Remarks

Advanced GAN architectures have broadened the scope and potential of generative modeling. Each architecture comes with its own challenges alongside substantial benefits. From the more stable training of WGANs to the style control of StyleGANs, these architectures have opened new avenues in creative applications, data generation, data augmentation, and more.

If you’re looking to take advantage of these GAN architectures for your projects, our team at PureLogics can assist you in implementing personalized solutions as per your specific organizational needs.

PureLogics is one of the well-known GenAI development companies in the U.S.A. Our generative AI services have helped hundreds of small- and large-scale companies worldwide. Fill out the form to get a free consultation from the best AI/ML experts in the USA!

January 1, 2025